Service discovery at Stripe

With so many new technologies coming out every year (like Kubernetes or Habitat), it’s easy to become so entangled in our excitement about the future that we forget to pay homage to the tools that have been quietly supporting our production environments. One such tool we've been using at Stripe for several years now is Consul. Consul helps discover services (that is, it helps us navigate the thousands of servers we run with various services running on them and tells us which ones are up and available for use). This effective and practical architectural choice wasn't flashy or entirely novel, but has served us dutifully in our continued mission to provide reliable service to our users around the world.

We’re going to talk about:

- What service discovery and Consul are,

- How we managed the risks of deploying a critical piece of software,

- The challenges we ran into along the way and what we did about them.

You don’t just set up new software and expect it to magically work and solve all your problems—using new software is a process. This is an example of what the process of using new software in production has looked like for us.

What’s service discovery?

Great question! Suppose you’re a load balancer for Stripe, and a request to create a charge has come in. You want to send it to an API server. Any API server!

We run thousands of servers with various services running on them. Which ones are the API servers? What port is the API running on? One amazing thing about using AWS is that our instances can go down at any time, so we need to be prepared to:

- Lose API servers at any time,

- Add extra servers to the rotation if we need additional capacity.

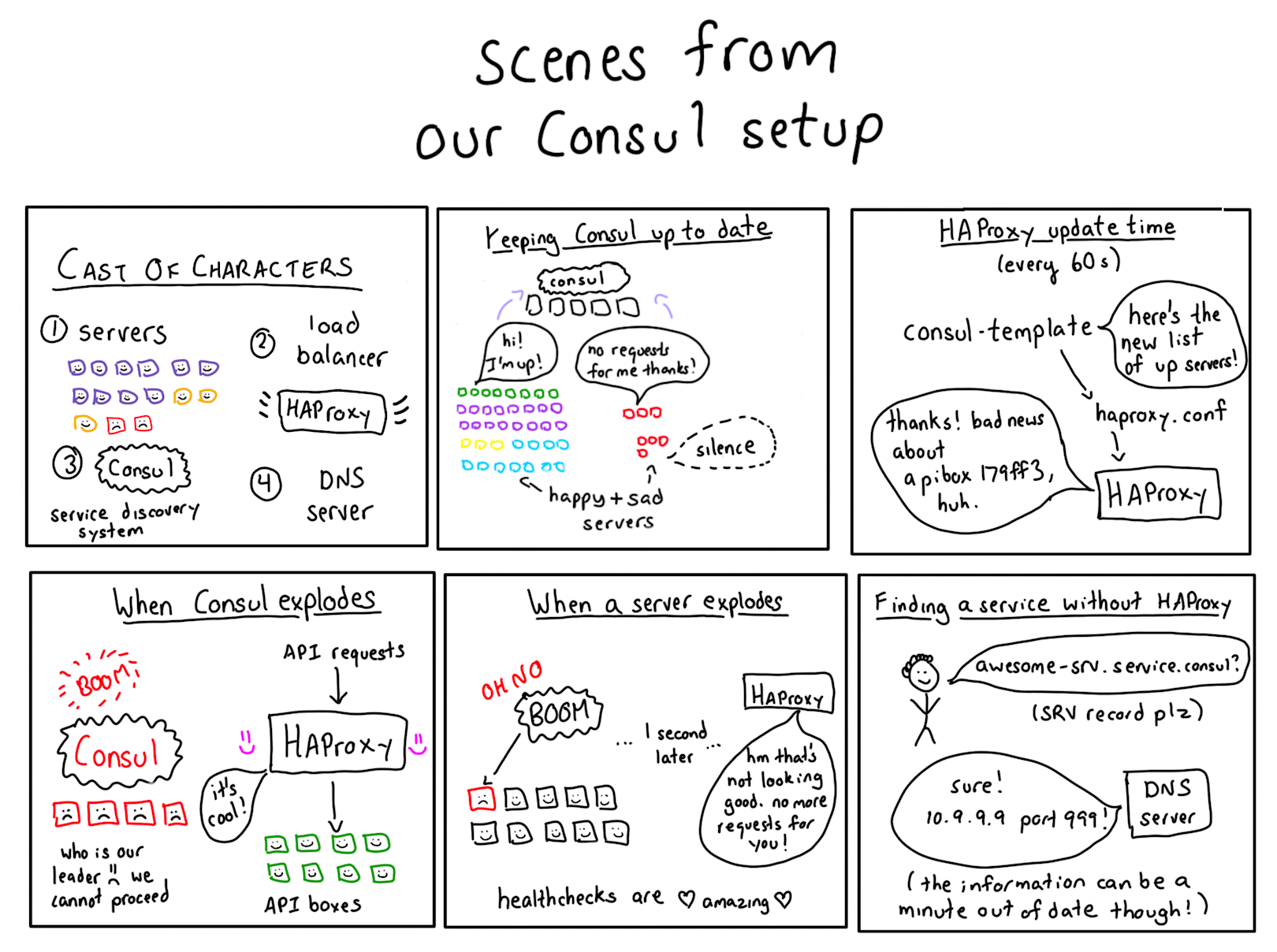

This problem of tracking changes around which boxes are available is called service discovery. We use a tool called Consul from HashiCorp to do service discovery.

The fact that our instances can go down at any time is actually very helpful—our infrastructure gets regular practice losing instances and dealing with it automatically, so when it happens it’s just business as usual. It’s easier to handle failure gracefully when failure happens often.

Introduction to Consul

Consul is a service discovery tool: it lets services register themselves and discover other services. It stores which services are up in a database, has client software that puts information in that database, and has other client software that reads from that database. There are a lot of pieces to wrap your head around!

The most important component of Consul is the database. This database contains entries like “api-service is running at IP 10.99.99.99 at port 12345. It is up.”

Individual boxes publish information to Consul saying “Hi! I am running api-service on port 12345! I am up!”.

Then if you want to talk to the API service, you can ask Consul “Which api-services are up?”. It will give you back a list of IP addresses and ports you can talk to.
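As a rough illustration of the publish-then-ask flow described above, here is a toy in-memory model in Python. It is only a sketch: `ToyRegistry`, `Instance`, and the second IP address are hypothetical names made up for this example, and none of it is Consul's actual API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    service: str
    ip: str
    port: int


class ToyRegistry:
    """In-memory stand-in for the service database (not Consul's real API)."""

    def __init__(self):
        # Maps each registered instance to its last reported health.
        self._up = {}

    def report(self, instance, up):
        """A box publishes: 'I run this service at this address, and I am up/down.'"""
        self._up[instance] = up

    def healthy(self, service):
        """Answer 'which instances of this service are up?' as (ip, port) pairs."""
        return sorted(
            (inst.ip, inst.port)
            for inst, up in self._up.items()
            if inst.service == service and up
        )


registry = ToyRegistry()
registry.report(Instance("api-service", "10.99.99.99", 12345), up=True)
registry.report(Instance("api-service", "10.99.99.98", 12345), up=False)  # hypothetical box
print(registry.healthy("api-service"))
```

Only the instance that reported itself as up comes back from the query, which is the list a client would then connect to.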

Consul is a distributed system itself (remember: we can lose any box at any time, which means we could lose the Consul server itself!) so it uses a consensus algorithm called Raft to keep its database in sync.

If you’re interested in consensus in Consul, read more here.

The beginning of Consul at Stripe

We started out by only writing to Consul—having machines report whether or not they were up to the Consul server, but not using that information to do service discovery. We wrote some Puppet configuration to set it up, which wasn’t that hard!

This way we could uncover potential issues with running the Consul client and get experience operating it on thousands of machines. At first, no services were being discovered with Consul.

What could go wrong?

Addressing memory leaks

If you add a new piece of software to every box in your infrastructure, that software could definitely go wrong! Early on we ran into memory leaks in Consul’s stats library: we noticed that one box was taking over 100MB of RAM and climbing. This was a bug in Consul, which got fixed.

100MB of memory is not a big leak, but the leak was growing quickly. Memory leaks in general are worrisome because they're one of the easiest ways for one process on a box to Totally Ruin Things for other processes on the box.

Good thing we decided not to use Consul to discover services to start! Letting it sit on a bunch of production machines and monitoring memory usage let us find out about a potentially serious problem quickly with no negative impact.

Starting to discover services with Consul

Once we were more confident that running Consul in our infrastructure would work, we started adding a few clients to talk to Consul! We made this less risky in 2 ways:

- Only use Consul in a few places to start,

- Keep a fallback system in place so that we could function during outages.

Here are some of the issues we ran into. We’re not listing these to complain about Consul, but rather to emphasize that when using new technology, it’s important to roll it out slowly and be cautious.

A ton of Raft failovers. Remember that Consul uses a consensus protocol? This copies all the data on one server in the Consul cluster to other servers in that cluster. The primary server was having a ton of problems with disk I/O—the disks weren’t fast enough to do the reads that Consul wanted to do, and the whole primary server would hang. Then Raft would say “oh, the primary is down!” and elect a new primary, and the cycle would repeat. While Consul was busy electing a new primary, it would not let anybody write anything or read anything from its database (because consistent reads are the default).

Version 0.3 broke SSL completely. We were using Consul’s SSL feature (technically, TLS) for our Consul nodes to communicate securely. One Consul release just broke it. We patched it. This is an example of a kind of issue that isn’t that difficult to detect or scary (we tested in QA, realized SSL was broken, and just didn’t roll out the release), but is pretty common when using early-stage software.

Goroutine leaks. We started using Consul’s leader election and there was a goroutine leak that caused Consul to quickly eat all the memory on the box. The Consul team was really helpful in debugging this and we fixed a bunch of memory leaks (different memory leaks from before).

Once all of these were fixed, we were in a much better place. Getting from “our first Consul client” to “we’ve fixed all these issues in production” took a bit less than a year of background work cycles.

Scaling Consul to discover which services are up

So, we’d learned about a bunch of bugs in Consul, and had them fixed, and everything was operating much better. Remember that step we talked about at the beginning, though? Where you ask Consul “hey, what boxes are up for api-service?” We were having intermittent problems where the Consul server would respond slowly or not at all.

This was mostly during Raft failovers or instability. Because Consul uses a strongly consistent store, its availability will always be weaker than that of a system that doesn't. It was especially rough in the early days.

We still had fallbacks, but Consul outages became pretty painful for us. We would fall back to a hardcoded set of DNS names (like “apibox1”) when Consul was down. This worked okay when we first rolled out Consul, but as we scaled and used Consul more widely, it became less and less viable.
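A fallback of this kind might look roughly like the Python sketch below. It assumes a hypothetical `query_consul` callable that raises when Consul cannot answer. The `FALLBACKS` table and both stub functions are invented for illustration and are not the code we ran.

```python
def discover(service, query_consul, fallback_names):
    """Return backends from Consul, or hardcoded names if Consul is unavailable."""
    try:
        return query_consul(service)
    except (ConnectionError, TimeoutError):
        # Consul is down or too slow: use the static list instead.
        return fallback_names.get(service, [])


FALLBACKS = {"api-service": ["apibox1"]}  # hypothetical hardcoded DNS names


def consul_up(service):
    # Stub standing in for a successful lookup.
    return ["10.99.99.99:12345"]


def consul_down(service):
    # Stub standing in for an outage.
    raise TimeoutError("Consul did not answer")


print(discover("api-service", consul_up, FALLBACKS))
print(discover("api-service", consul_down, FALLBACKS))
```

The weakness is visible in the sketch: the static table has to be maintained by hand, so it drifts further from reality as the number of services grows.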

Consul Template to the rescue

Asking Consul which services were up (via its HTTP API) was unreliable. But we were happy with it otherwise!

We wanted to get information out of Consul about which services were up without using its API. How?

Well, Consul would take a name (like monkey-srv) and translate it into one or several IP addresses (“this is where monkey-srv lives”). Know what else takes in names and outputs IP addresses? A DNS server! So we replaced Consul with a DNS server. Here’s how: Consul Template is a Go program that generates static configuration files from your Consul database.

We started using Consul Template to generate DNS records for Consul services. So if monkey-srv was running on IP 10.99.99.99, we’d generate a DNS A record pointing monkey-srv.service.consul at 10.99.99.99.

Here’s what that looks like in code. You can also find our real Consul Template configuration which is a little more complicated.

If you're thinking "wait, DNS records only have an IP address, you also need to know which port the server is running on," you are right! DNS A records (the kind you normally see) only have an IP address in them. However, DNS SRV records can have ports in them, and we also use Consul Template to generate SRV records.
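To make the difference between A and SRV records concrete, here is a small Python sketch that renders both in zone-file style. It is an illustration under assumptions, not our Consul Template configuration: the `render_records` helper, the `box1.node.consul` target hostname, the TTL of 60, and the priority and weight of 1 are all made up for the example.

```python
def render_records(service, instances, domain="service.consul", ttl=60):
    """Render zone-file style A and SRV lines for one service.

    `instances` is a list of (target_host, ip, port) tuples.
    """
    name = f"{service}.{domain}."
    lines = []
    for host, ip, port in instances:
        # A record: the service name maps to an IP address only.
        lines.append(f"{name} {ttl} IN A {ip}")
        # SRV record fields: priority, weight, port, target host.
        lines.append(f"{name} {ttl} IN SRV 1 1 {port} {host}.")
    return lines


records = render_records(
    "monkey-srv",
    [("box1.node.consul", "10.99.99.99", 12345)],  # hypothetical host name
)
print("\n".join(records))
```

The A line answers "where is monkey-srv?", while the SRV line also carries the port, so a client does not need to know it in advance.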

We run Consul Template in a cron job every 60 seconds. Consul Template also has a “watch” mode (the default) which continuously updates configuration files when its database is updated. When we tried the watch mode, it DOSed our Consul server, so we stopped using it.

So if our Consul server goes down, our internal DNS server still has all the records! They might be a little old, but that’s fine. What’s awesome about our DNS server is that it’s not a fancy distributed system, which means it’s a much simpler piece of software, and much less likely to spontaneously break. This means that I can just look up monkey-srv.service.consul, get an IP, and use it to talk to my service!

Because DNS is a shared-nothing, eventually consistent system, we can replicate and cache it a bunch (we have 5 canonical DNS servers, and every server has a local DNS cache and knows how to talk to any of the 5 canonical servers), so it's fundamentally more resilient than Consul.
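The "slightly stale but still available" behavior can be modeled in a few lines of Python. This is a toy sketch, assuming a hypothetical `fetch` callable that returns fresh records or raises `ConnectionError` during an outage. `RecordSnapshot` and the two stubs are invented names, not part of Consul, Consul Template, or any DNS server.

```python
class RecordSnapshot:
    """Keeps the last successfully generated records (a toy model, not a DNS server)."""

    def __init__(self):
        self.records = {}
        self.stale = False

    def refresh(self, fetch):
        """Run periodically (for example from cron); keep the old records if fetch fails."""
        try:
            self.records = dict(fetch())
            self.stale = False
        except ConnectionError:
            # The source of truth is unreachable: serve what we already have.
            self.stale = True

    def lookup(self, name):
        return self.records.get(name)


def consul_ok():
    # Stub standing in for a successful render from the Consul database.
    return {"monkey-srv.service.consul": "10.99.99.99"}


def consul_outage():
    # Stub standing in for a Consul outage.
    raise ConnectionError("Consul is unreachable")


snapshot = RecordSnapshot()
snapshot.refresh(consul_ok)      # normal run
snapshot.refresh(consul_outage)  # next run happens during an outage
print(snapshot.lookup("monkey-srv.service.consul"), snapshot.stale)
```

Lookups keep working through the outage because reads never touch the upstream source. The cost is that an answer can be as old as the last successful refresh.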

Adding a load balancer for faster healthchecks

We just said that we update DNS records from Consul every 60 seconds. So, what happens if an API server explodes? Do we keep sending requests to that IP for up to 60 more seconds until the DNS server gets updated? We do not! There’s one more piece of the story: HAProxy.

HAProxy is a load balancer. If you give it a healthcheck for the service it’s sending requests to, it can make sure that your backends are up! All of our API requests actually go through HAProxy. Here’s how it works:

- Every 60 seconds, Consul Template writes an HAProxy configuration file.

- This means that HAProxy always has an approximately correct set of backends.

- If a machine goes down, HAProxy realizes quickly that something has gone wrong (since it runs healthchecks every 2 seconds).

This means we restart HAProxy every 60 seconds. But does that mean we drop connections when we restart HAProxy? No. To avoid dropping connections between restarts, we use HAProxy’s graceful restarts feature. It’s still possible to drop some traffic with this restart policy, as described here, but we don’t process enough traffic that it’s an issue.

We have a standard healthcheck endpoint for our services: almost every service has a /healthcheck endpoint that returns 200 if it’s up and errors if not. Having a standard is important because it means we can easily configure HAProxy to check service health.
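As a hedged sketch of what generating such a configuration could involve, the Python below renders an HAProxy backend section with an HTTP healthcheck against /healthcheck every 2 seconds. The `render_backend` helper, the backend name `api`, and the server label `api1` are assumptions for illustration. In practice Consul Template does the rendering, and the real configuration has more in it.

```python
def render_backend(name, servers, check_path="/healthcheck", interval="2s"):
    """Render a minimal HAProxy backend section.

    `servers` is a list of (label, ip, port) tuples.
    """
    lines = [
        f"backend {name}",
        # Probe each server over HTTP instead of a plain TCP connect.
        f"    option httpchk GET {check_path}",
    ]
    for label, ip, port in servers:
        # `check inter` turns on healthchecks and sets how often they run.
        lines.append(f"    server {label} {ip}:{port} check inter {interval}")
    return "\n".join(lines) + "\n"


config = render_backend("api", [("api1", "10.99.99.99", 12345)])
print(config)
```

Because every service exposes the same endpoint, the only per-service inputs to the template are the backend name and the list of servers.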

When Consul is down, HAProxy will just have a stale configuration file, which will keep working.

Trading consistency for availability

If you’ve been paying close attention, you’ll notice that the system we started with (a strongly consistent database which was guaranteed to have the latest state) was very different from the system we finished with (a DNS server which could be up to a minute behind). Giving up our requirement for consistency let us have a much more available system: Consul outages have basically no effect on our ability to discover services.

An important lesson from this is that consistency doesn’t come for free! You have to be willing to pay the price in availability, and so if you’re going to be using a strongly consistent system it’s important to make sure that’s something you actually need.

What happens when you make a request

We covered a lot in this post, so let’s go through the request flow now that we’ve learned how it all works.

When you make a request for https://stripe.com/, what happens? How does it end up at the right server? Here’s a simplified explanation:

- It comes into one of our public load balancers, running HAProxy,

- Consul Template has populated a list of servers serving stripe.com in the /etc/haproxy.conf configuration file,

- HAProxy reloads this configuration file every 60 seconds,

- HAProxy sends your request on to a stripe.com server! It makes sure that the server is up.
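The last step, sending a request only to a server that is up, can be sketched as a round-robin that skips unhealthy backends. This is a simplified Python model, not HAProxy's implementation. Round-robin is an assumed balancing policy, and the `make_router` helper and the backend addresses are hypothetical.

```python
import itertools


def make_router(backends, is_healthy):
    """Round-robin over backends, skipping any that fail the health check."""
    cycle = itertools.cycle(backends)

    def route():
        # Try each backend at most once per request.
        for _ in range(len(backends)):
            candidate = next(cycle)
            if is_healthy(candidate):
                return candidate
        raise RuntimeError("no healthy backends")

    return route


backends = ["10.0.0.1:80", "10.0.0.2:80", "10.0.0.3:80"]  # hypothetical servers
down = {"10.0.0.2:80"}  # pretend this box just went away
route = make_router(backends, lambda backend: backend not in down)
chosen = [route() for _ in range(3)]
print(chosen)
```

The list of backends can be up to a minute out of date, but the health check keeps requests away from a dead server in the meantime.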

It’s actually a little more complicated than that (there’s an extra layer, and Stripe API requests are even more complicated, because we have systems to deal with PCI compliance), but all the core ideas are there.

This means that when we bring up or take down servers, Consul takes care of removing them from the HAProxy rotation automatically. There’s no manual work to do.

More than a year of peace

There are a lot of areas we’re looking forward to improving in our approach to service discovery. It’s a space with loads of active development and we see some elegant opportunities for integrating our scheduling and request routing infrastructure in the near future.

However, one of the important lessons we’ve taken away is that simple approaches are often the right ones. This system has been working for us reliably for more than a year without any incidents. Stripe doesn’t process anywhere near as many requests as Twitter or Facebook, but we do care a very great deal about extreme reliability. Sometimes the best wins come from deploying a stable, excellent solution instead of a novel one.