Learning to operate Kubernetes reliably

We recently built a distributed cron job scheduling system on top of Kubernetes, an exciting new platform for container orchestration. Kubernetes is very popular right now and makes a lot of exciting promises: one of the most exciting is that engineers don’t need to know or care what machines their applications run on.

Distributed systems are really hard, and managing services on distributed systems is one of the hardest problems operations teams face. Breaking in new software in production and learning how to operate it reliably is something we take very seriously. As an example of why learning to operate Kubernetes is important (and why it's hard!), here's a fantastic postmortem of a one-hour outage caused by a bug in Kubernetes.

In this post, we'll explain why we chose to build on top of Kubernetes. We’ll examine how we integrated Kubernetes into our existing infrastructure, our approach to building confidence in (and improving) our Kubernetes cluster's reliability, and the abstractions we’ve built on top of Kubernetes.

What's Kubernetes?

Kubernetes is a distributed system for scheduling programs to run in a cluster. You can tell Kubernetes to run five copies of a program, and it'll dynamically schedule them on your worker nodes. Containers are automatically scheduled to increase utilization and save money, powerful deployment primitives allow you to gradually roll out new code, and Security Contexts and Network Policies allow you to run multi-tenant workloads in a secure way.

Kubernetes has a lot of different kinds of scheduling capabilities built into it. It can schedule long-running HTTP services, daemonsets that run on every machine in your cluster, cron jobs that run every hour, and more. There's a lot more to Kubernetes. If you want to know more, Kelsey Hightower has given a lot of excellent talks: "Kubernetes for sysadmins" and "healthz: Stop reverse engineering applications and start monitoring from the inside" are two nice starting points. There’s also a great, supportive community on Slack.

Why Kubernetes?

Every infrastructure project (hopefully!) starts with a business need, and our goal was to improve the reliability and security of an existing distributed cron job system we had. Our requirements were:

- We needed to be able to build and operate it with a relatively small team (only 2 people were working full time on the project.)

- We needed to schedule about 500 different cron jobs across around 20 machines reliably.

Here are a few reasons we decided to build on top of Kubernetes:

- We wanted to build on top of an existing open-source project.

- Kubernetes includes a distributed cron job scheduler, so we wouldn't have to write one ourselves.

- Kubernetes is a very active project and regularly accepts contributions.

- Kubernetes is written in Go, which is easy to learn. Almost all of our Kubernetes bugfixes were made by inexperienced Go programmers on our team.

- If we could successfully operate Kubernetes, we could build on top of Kubernetes in the future (for example, we're currently working on a Kubernetes-based system to train machine learning models.)

We'd previously been using Chronos as a cron job scheduling system, but it was no longer meeting our reliability requirements and it's mostly unmaintained (1 commit in the last 9 months, and the last time a pull request was merged was March 2016). Because Chronos is unmaintained, we decided it wasn't worth continuing to invest in improving our existing cluster.

If you’re considering Kubernetes, keep in mind: don't use Kubernetes just because other companies are using it. Setting up a reliable cluster takes a huge amount of time, and the business case for using it isn't always obvious. Invest your time in a smart way.

What does reliable mean?

When it comes to operating services, the word reliable isn't meaningful on its own. To talk about reliability, you first need to establish an SLO (service level objective).

We had three primary goals:

- 99.99% of cron jobs should get scheduled and start running within 20 minutes of their scheduled run time. 20 minutes is a pretty wide window, but we interviewed our internal customers and none of them asked for higher precision.

- Jobs should run to completion 99.99% of the time (without being terminated).

- Our migration to Kubernetes shouldn't cause any customer-facing incidents.

This meant a few things:

- Short periods of downtime in the Kubernetes API are acceptable (if it's down for ten minutes, it's ok as long as we can recover within five minutes.)

- Scheduling bugs (where a cron job run gets dropped completely and fails to run at all) are not acceptable. We took reports of scheduling bugs extremely seriously.

- We needed to be careful about pod evictions and terminating instances safely so that jobs didn't get terminated too frequently.

- We needed a good migration plan.

Building a Kubernetes cluster

Our basic approach to setting up our first Kubernetes cluster was to build the cluster from scratch, using Kubernetes The Hard Way as a reference, instead of using a tool like kubeadm or kops. We provisioned our configuration with Puppet, our usual configuration management tool. Building from scratch was great for two reasons: we were able to deeply integrate Kubernetes in our architecture, and we developed a deep understanding of its internals.

Building from scratch let us integrate Kubernetes into our existing infrastructure. We wanted seamless integration with our existing systems for logging, certificate management, secrets, network security, monitoring, AWS instance management, deployment, database proxies, internal DNS servers, configuration management, and more. Integrating all those systems sometimes required a little creativity, but overall was easier than trying to shoehorn kubeadm/kops into doing what we wanted.

We already trust and know how to operate all those existing systems, so we wanted to keep using them in our new Kubernetes cluster. For example, secure certificate management is a very hard problem, and we already have a way to issue and manage certificates. With a proper integration, we were able to avoid creating a new CA just for Kubernetes.

We were forced to understand exactly how the parameters we were setting affected our Kubernetes setup. For example, there are over a dozen parameters used when configuring the certificates/CAs used for authentication. Understanding all of those parameters made it way easier to debug our setup when we ran into issues with authentication.

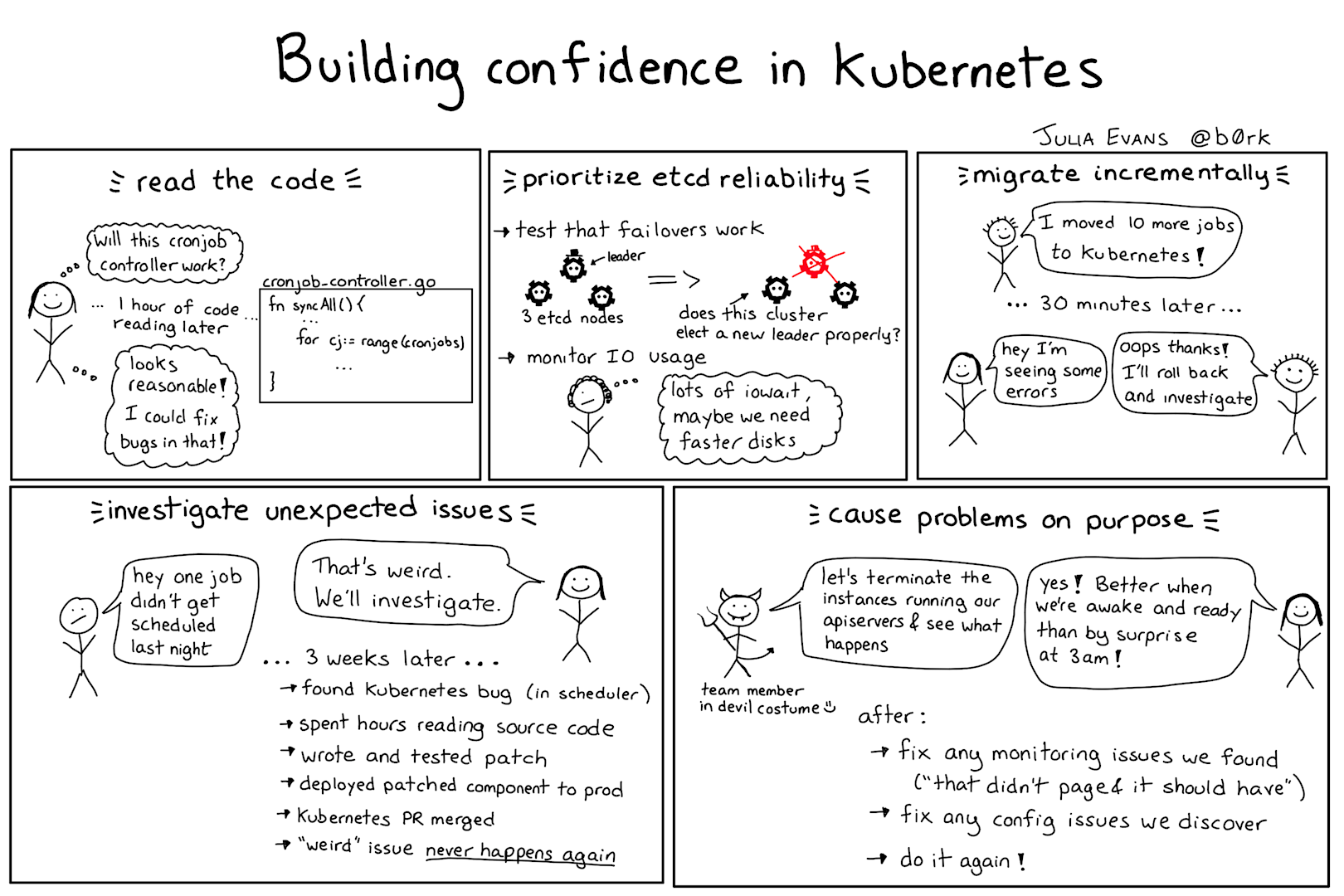

Building confidence in Kubernetes

At the beginning of our Kubernetes work, nobody on the team had ever used Kubernetes before (except in some cases for toy projects). How do you get from "None of us have ever used Kubernetes" to "We're confident running Kubernetes in production"?

Strategy 0: Talk to other companies

We asked a few folks at other companies about their experiences with Kubernetes. They were all using Kubernetes in different ways or in different environments (to run HTTP services, on bare metal, on Google Kubernetes Engine, etc).

Especially when talking about a large and complicated system like Kubernetes, it's important to think critically about your own use cases, do your own experiments, build confidence in your own environment, and make your own decisions. For example, you should not read this blog post and conclude "Well, Stripe is using Kubernetes successfully, so it will work for us too!"

Here’s what we learned after conversations with several companies operating Kubernetes clusters:

- Prioritize working on your etcd cluster's reliability (etcd is where all of your Kubernetes cluster's state is stored.)

- Some Kubernetes features are more stable than others, so be cautious of alpha features. Some companies only use stable features after they’ve been stable for more than one release (e.g. if a feature became stable in 1.8, they'd wait for 1.9 or 1.10 before using it.)

- Consider using a hosted Kubernetes system like GKE/AKS/EKS. Setting up a high-availability Kubernetes system yourself from scratch is a huge amount of work. AWS didn't have a managed Kubernetes service during this project so this wasn't an option for us.

- Be careful about the additional network latency introduced by overlay networks / software defined networking.

Talking to other companies of course didn't give us a clear answer on whether Kubernetes would work for us, but it did give us questions to ask and things to be cautious about.

Strategy 1: Read the code

We were planning to depend quite heavily on one component of Kubernetes, the cronjob controller. This component was in alpha at the time, which made us a little worried. We'd tried it out in a test cluster, but how could we tell whether it would work for us in production?

Thankfully, all of the cron job controller's core functionality is just 400 lines of Go. Reading through the source code quickly showed that:

- The cron job controller is a stateless service (like every other Kubernetes component, except etcd).

- Every ten seconds, this controller calls the syncAll function:

  go wait.Until(jm.syncAll, 10*time.Second, stopCh)

- The syncAll function fetches all cron jobs from the Kubernetes API, iterates through that list, determines which jobs should next run, then starts those jobs.

The core logic seemed relatively easy to understand. More importantly, we felt like if there was a bug in this controller, it was probably something we could fix ourselves.

Strategy 2: Do load testing

Before we started building the cluster in earnest, we did a little bit of load testing. We weren't worried about how many nodes the Kubernetes cluster could handle (we were planning to deploy around 20 nodes), but we did want to make certain Kubernetes could handle running as many cron jobs as we wanted to run (about 50 per minute).

We ran a test in a 3-node cluster where we created 1,000 cron jobs that each ran every minute. Each of these jobs simply ran bash -c 'echo hello world'. We chose simple jobs because we wanted to test the scheduling and orchestration abilities of the cluster, not the cluster's total compute capacity.

Our test cluster could not handle 1,000 cron jobs per minute. We observed that every node would only start at most one pod per second, and the cluster was able to run 200 cron jobs per minute without issue. Since we only wanted to run approximately 50 cron jobs per minute, we decided these limits weren't a blocker (and that we could figure them out later if required). Onwards!

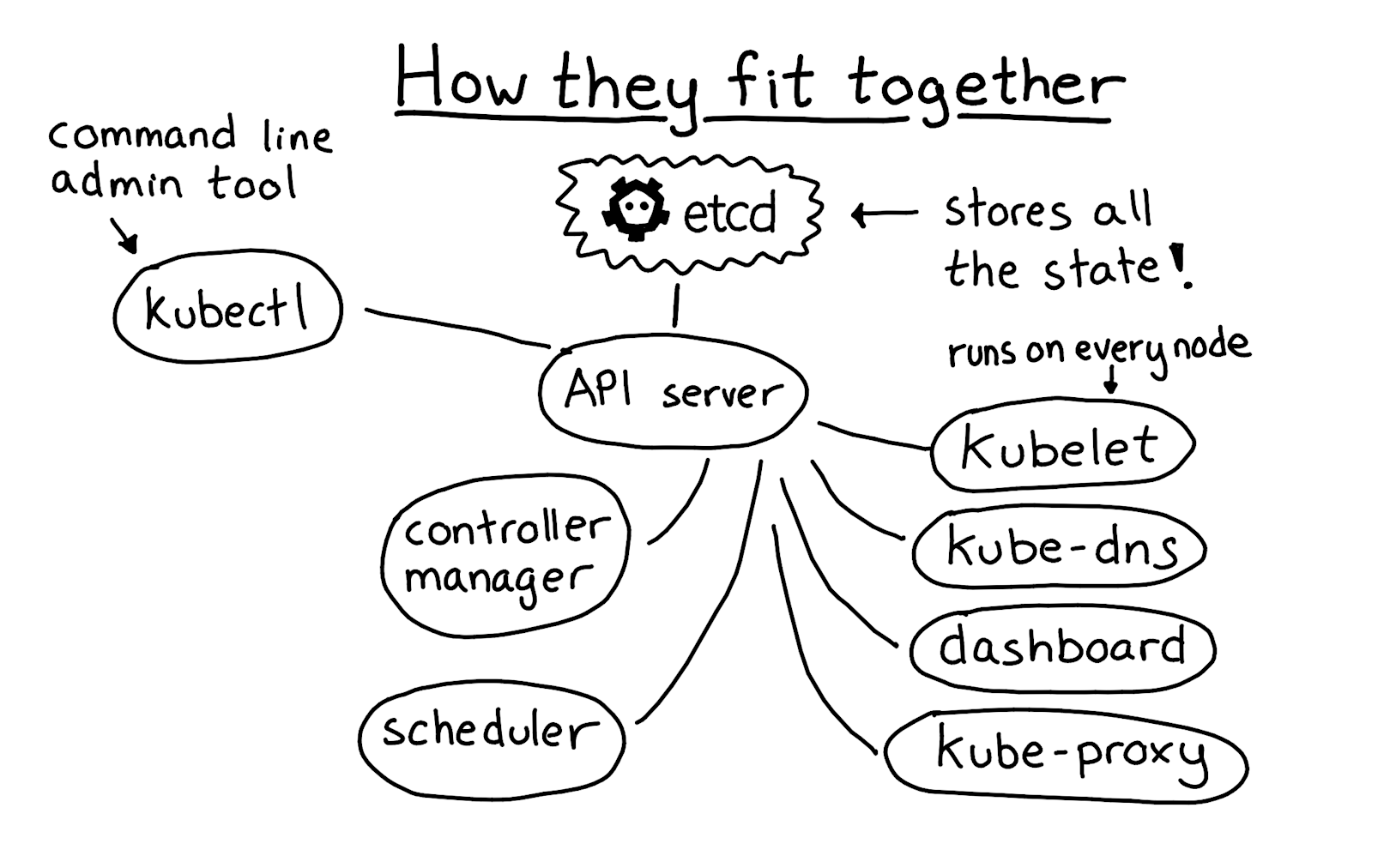

Strategy 3: Prioritize building and testing a high availability etcd cluster

One of the most important things to get right when setting up Kubernetes is running etcd. Etcd is the heart of your Kubernetes cluster---it's where all of the data about everything in your cluster is stored. Everything other than etcd is stateless. If etcd isn't running, you can't make any changes to your Kubernetes cluster (though existing services will continue running!).

This diagram shows how etcd is the heart of your Kubernetes cluster---the API server is a stateless REST/authentication endpoint in front of etcd, and then every other component works by talking to etcd through the API server.

When running etcd, there are two important points to keep in mind:

- Set up replication so that your cluster doesn't die if you lose a node. We have three etcd replicas right now.

- Make sure you have enough I/O bandwidth available. Our version of etcd had an issue where one node with high fsync latency could trigger continuous leader elections, causing unavailability on our cluster. We remediated this by ensuring that all of our nodes had more I/O bandwidth than the number of writes etcd was performing.

Setting up replication isn't a set-and-forget operation. We carefully tested that we could actually lose an etcd node, and that the cluster gracefully recovered.
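The reason three replicas can survive the loss of one node comes down to quorum arithmetic: etcd needs a majority of members to agree before it can commit a write. A small Go sketch of that calculation (the function names are ours, chosen for illustration):

```go
package main

import "fmt"

// quorum is the number of members that must agree for a cluster of n to make progress.
func quorum(n int) int { return n/2 + 1 }

// tolerated is how many members can fail while a quorum still remains.
func tolerated(n int) int { return n - quorum(n) }

func main() {
	for _, n := range []int{1, 2, 3, 4, 5} {
		fmt.Printf("members=%d quorum=%d tolerated failures=%d\n", n, quorum(n), tolerated(n))
	}
}
```

With three members the quorum is two, so one node can be lost. Adding a fourth member raises the quorum to three without raising the number of tolerated failures, which is why odd cluster sizes are the usual choice.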

Here's some of the work we did to set up our etcd cluster:

- Set up replication

- Monitor that the etcd service is available (if etcd is down, we want to know right away)

- Write some simple tooling so we could easily spin up new etcd nodes and join them to the cluster

- Patch etcd's Consul integration so that we could run more than 1 etcd cluster in our production environment

- Test recovering from an etcd backup

- Test that we could rebuild the whole cluster without downtime

We were happy that we did this testing pretty early on. One Friday morning in our production cluster, one of our etcd nodes stopped responding to ping. We got alerted about it, terminated the node, brought up a new one, joined it to the cluster, and in the meantime Kubernetes continued running without incident. Fantastic.

Strategy 4: Incrementally migrate jobs to Kubernetes

One of our major goals was to migrate our jobs to Kubernetes without causing any outages. The secret to running a successful production migration is not to avoid making any mistakes (that's impossible), but to design your migration to reduce the impact of mistakes.

We were lucky to have a wide variety of jobs to migrate to our new cluster, so there were some low-impact jobs we could migrate where one or two failures were acceptable.

Before starting the migration, we built easy-to-use tooling that would let us move jobs back and forth between the old and new systems in less than five minutes if necessary. This easy tooling reduced the impact of mistakes---if we moved over a job that had a dependency we hadn't planned for, no big deal! We could just move it back, fix the issue, and try again later.

Here's the overall migration strategy we took:

- Roughly order the jobs in terms of how critical they were

- Repeatedly move some jobs over to Kubernetes. If there's a new edge case we discover, quickly roll back, fix the issue, and try again.
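The two steps above can be sketched as a small planning function in Go. Everything here is hypothetical: the `job` type, the numeric `Criticality` score, and the batch size are invented for illustration, and the real ordering was rough and manual rather than computed.

```go
package main

import (
	"fmt"
	"sort"
)

// job is a cron job with a hypothetical criticality score (higher means more critical).
type job struct {
	Name        string
	Criticality int
}

// migrationBatches orders jobs from least to most critical and splits them
// into batches of at most size jobs, so low-impact jobs move first.
func migrationBatches(jobs []job, size int) [][]job {
	if size < 1 {
		size = 1
	}
	ordered := append([]job(nil), jobs...) // copy so the caller's slice is untouched
	sort.SliceStable(ordered, func(i, k int) bool {
		return ordered[i].Criticality < ordered[k].Criticality
	})
	var batches [][]job
	for start := 0; start < len(ordered); start += size {
		end := start + size
		if end > len(ordered) {
			end = len(ordered)
		}
		batches = append(batches, ordered[start:end])
	}
	return batches
}

func main() {
	jobs := []job{
		{Name: "billing-export", Criticality: 3},
		{Name: "tmp-cleanup", Criticality: 1},
		{Name: "usage-report", Criticality: 2},
	}
	for i, batch := range migrationBatches(jobs, 2) {
		fmt.Println("batch", i, batch)
	}
}
```

Moving the least critical jobs first means that the edge cases most likely to surface early land on jobs where one or two failures are acceptable.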

Strategy 5: Investigate Kubernetes bugs (and fix them)

We set out a rule at the beginning of the project: if Kubernetes does something surprising or unexpected, we have to investigate, figure out why, and come up with a remediation.

Investigating each issue is time consuming, but very important. If we simply dismissed flaky and strange behaviour in Kubernetes as a function of how complex distributed systems can become, we’d feel afraid of being on call for the resulting buggy cluster.

After taking this approach, we discovered (and were able to fix!) several bugs in Kubernetes.

Here are some of the issues we found:

- Cronjobs with names longer than 52 characters silently fail to schedule jobs (fixed here).

- Pods would sometimes get stuck in the Pending state forever (fixed here and here).

- The scheduler would crash every 3 hours (fixed here).

- Flannel's hostgw backend didn't replace outdated route table entries (fixed here).

Fixing these bugs made us feel much better about our use of the Kubernetes project---not only did it work relatively well, but they also accept patches and have a good PR review process.

Kubernetes definitely has bugs, like all software. In particular, we use the scheduler very heavily (because our cron jobs are constantly creating new pods), and the scheduler's use of caching sometimes results in bugs, regressions, and crashes. Caching is hard! But the codebase is approachable and we've been able to handle the bugs we encountered.

One other issue worth mentioning is Kubernetes' pod eviction logic. Kubernetes has a component called the node controller which is responsible for evicting pods and moving them to another node if a node becomes unresponsive. It's possible for all nodes to temporarily become unresponsive (e.g. due to a networking or configuration issue), and in that case Kubernetes can terminate all pods in the cluster. This happened to us relatively early on in our testing.

If you're running a large Kubernetes cluster, read through the node controller documentation, think through the settings carefully, and test extensively. Every time we've tested a configuration change to these settings (e.g. --pod-eviction-timeout) by creating network partitions, surprising things have happened. It's always better to discover these surprises in testing rather than at 3am in production.

Strategy 6: Intentionally cause Kubernetes cluster issues

We've discussed running game day exercises at Stripe before, and it's something we still do very frequently. The idea is to come up with situations you expect to eventually happen in production (e.g. losing a Kubernetes API server) and then intentionally cause those situations in production (during the work day, with warning) to ensure that you can handle them.

The exercises we ran on our cluster often revealed issues like gaps in monitoring or configuration errors. We were very happy to discover those issues early on in a controlled fashion rather than by surprise six months later.

Here are a few of the game day exercises we ran:

- Terminate one Kubernetes API server

- Terminate all the Kubernetes API servers and bring them back up (to our surprise, this worked very well)

- Terminate an etcd node

- Cut off worker nodes in our Kubernetes cluster from the API servers (so that they can't communicate). This resulted in all pods on those nodes being moved to other nodes.

We were really pleased to see how well Kubernetes responded to a lot of the disruptions we threw at it. Kubernetes is designed to be resilient to errors---it has one etcd cluster storing all the state, an API server which is simply a REST interface to that database, and a collection of stateless "controllers" that coordinate all cluster management.

If any of the Kubernetes core components (the API server, controller manager, or scheduler) are interrupted or restarted, once they come up they read the relevant state from etcd and continue operating seamlessly. This was one of the things we hoped would be true, and has actually worked very well in practice.

Here are some kinds of issues that we found during these tests:

- "Weird, I didn't get paged for that, that really should have paged. Let's fix our monitoring there."

- "When we destroyed our API server instances and brought them back up, they required human intervention. We’d better fix that."

- "Sometimes when we do an etcd failover, the API server starts timing out requests until we restart it."

After running these tests, we developed remediations for the issues we found: we improved monitoring, fixed configuration issues we'd discovered, and filed bugs with Kubernetes.

Making cron jobs easy to use

Let's briefly explore how we made our Kubernetes-based system easy to use.

Our original goal was to design a system for running cron jobs that our team was confident operating and maintaining. Once we had established our confidence in Kubernetes, we needed to make it easy for our fellow engineers to configure and add new cron jobs. We developed a simple YAML configuration format so that our users didn't need to understand anything about Kubernetes’ internals to use the system. Here's the format we developed:

We didn't do anything very fancy here---we wrote a simple program to take this format and translate it into Kubernetes cron job configurations that we apply with kubectl.

We also wrote a test suite to ensure that job names aren't too long (Kubernetes cron job names can't be more than 52 characters) and that all names are unique. We don't currently use cgroups to enforce memory limits on most of our jobs, but it's something we plan to roll out in the future.

Our simple format was easy to use, and since we automatically generated both Chronos and Kubernetes cron job definitions from the same format, moving a job between the two systems was really easy. This was a key part of making our incremental migration work well. Whenever moving a job to Kubernetes caused issues, we could move it back with a simple three-line configuration change and in less than ten minutes.

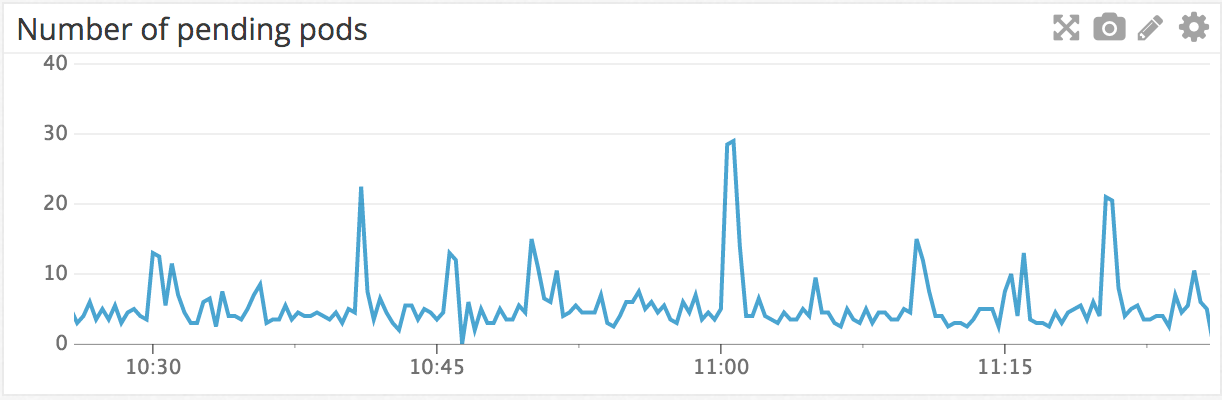

Monitoring Kubernetes

Monitoring our Kubernetes cluster’s internal state has proven to be very pleasant. We use the kube-state-metrics package for monitoring and a small Go program called veneur-prometheus to scrape the Prometheus metrics kube-state-metrics emits and publish them as statsd metrics to our monitoring system.

For example, here's a chart of the number of pending pods in our cluster over the last hour. Pending means that they're waiting to be assigned a worker node to run on. You can see that the number spikes at 11am, because a lot of our cron jobs run at the 0th minute of the hour.

We also have a monitor that checks that no pods are stuck in the Pending state---we check that every pod starts running on a worker node within 5 minutes, or we otherwise receive an alert.

Future plans for Kubernetes

Setting up Kubernetes, getting to a place where we were comfortable running production code, and migrating all our cron jobs to the new cluster took us five months with three engineers working full time. One big reason we invested in learning Kubernetes is that we expect to be able to use Kubernetes more widely at Stripe.

Here are some principles that apply to operating Kubernetes (or any other complex distributed system):

- Define a clear business reason for your Kubernetes projects (and all infrastructure projects!). Understanding the business case and the needs of our users made our project significantly easier.

- Aggressively cut scope. We decided to avoid using many of Kubernetes’ basic features to simplify our cluster. This let us ship more quickly---for example, since pod-to-pod networking wasn't a requirement for our project, we could firewall off all network connections between nodes and defer thinking about network security in Kubernetes to a future project.

- Invest a lot of time into learning how to properly operate a Kubernetes cluster. Test edge cases carefully. Distributed systems are extremely complicated and there's a lot of potential for things to go wrong. Take the example we described earlier: the node controller can kill all pods in your cluster if they lose contact with API servers, depending on your configuration. Learning how Kubernetes behaves after each configuration change takes time and careful focus.

By staying focused on these principles, we've been able to use Kubernetes in production with confidence. We'll continue to grow and evolve how we use Kubernetes over time---for example, we're watching AWS's release of EKS with interest. We're finishing work on another system to train machine learning models and are also exploring moving some HTTP services to Kubernetes. As we continue operating Kubernetes in production, we plan to contribute to the open-source project along the way.