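Many companies aim to be customer-centric, but that promise is hard to keep without a practical way to put the idea into action. Some organizations call customers regularly or visit them on site. Others run focus groups to understand their customers and their customers' customers. Still others hire customers to bring their thinking into the company.

Stripe employees stay user-centric by connecting with users. We did so when more than 1,000 developers had signed up before our product launched, and we still do now that we support more than 100,000 businesses worldwide. It is less a policy than an operating philosophy. Online surveys are one of the ways we connect every Stripe employee with users, and we encourage everyone to take part.

Surveys have long been a channel for getting useful customer feedback quickly. They reveal users' needs, pain points, and motivations, which helps us build better products and target the right markets. But a great survey only looks simple: it is easy to end up with a survey template that frustrates users and leaves you and your team confused.

This guide shares the main principles Stripe uses to build clear, intuitive, and engaging surveys. These survey best practices have helped us raise our survey response and completion rates more than twofold. We have also gained insights that improve product development and internal operations and, most importantly, that strengthen our connection with users.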

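The appeal of surveys

Think of a memorable survey you have taken.

If one came to mind, it is probably not because it deserved praise. Like any first-rate user experience, a great survey reduces friction and feels so natural that you barely notice it. It is the bad surveys that stick in the mind, such as one that asks about your "English proficiency" in English. (This really happened.)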

A particularly vexing survey question

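Great surveys (those that help build better products and stronger relationships with users) differ markedly from ordinary ones in five key respects. They:

- Increase the number of people who take and complete the survey

- Make it easier to draw insights from the survey data

- Ensure that data analysis is valid and accurate

- Help decisions based on the survey results win buy-in from all sides

- Change how people see the company and brand

The survey research design team, and everyone at Stripe who works on surveys, follows a few main principles to create great surveys: surveys that users love, that teams can analyze easily, that the company is glad to act on, and that might even make the world a better place.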

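Key principles behind great surveys

Principle 1: People are not here for the survey.

It is safe to say that close to 0% of customers visit your website looking for an online survey. They have other plans: buying something, renewing a subscription, checking a dashboard, or learning more about the brand.

If you put a survey between them and the task they came to complete, make taking it as effortless as possible. Answering should require little effort, and they should be able to get back to their task quickly. A few simple guidelines keep the experience smooth and raise participation: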

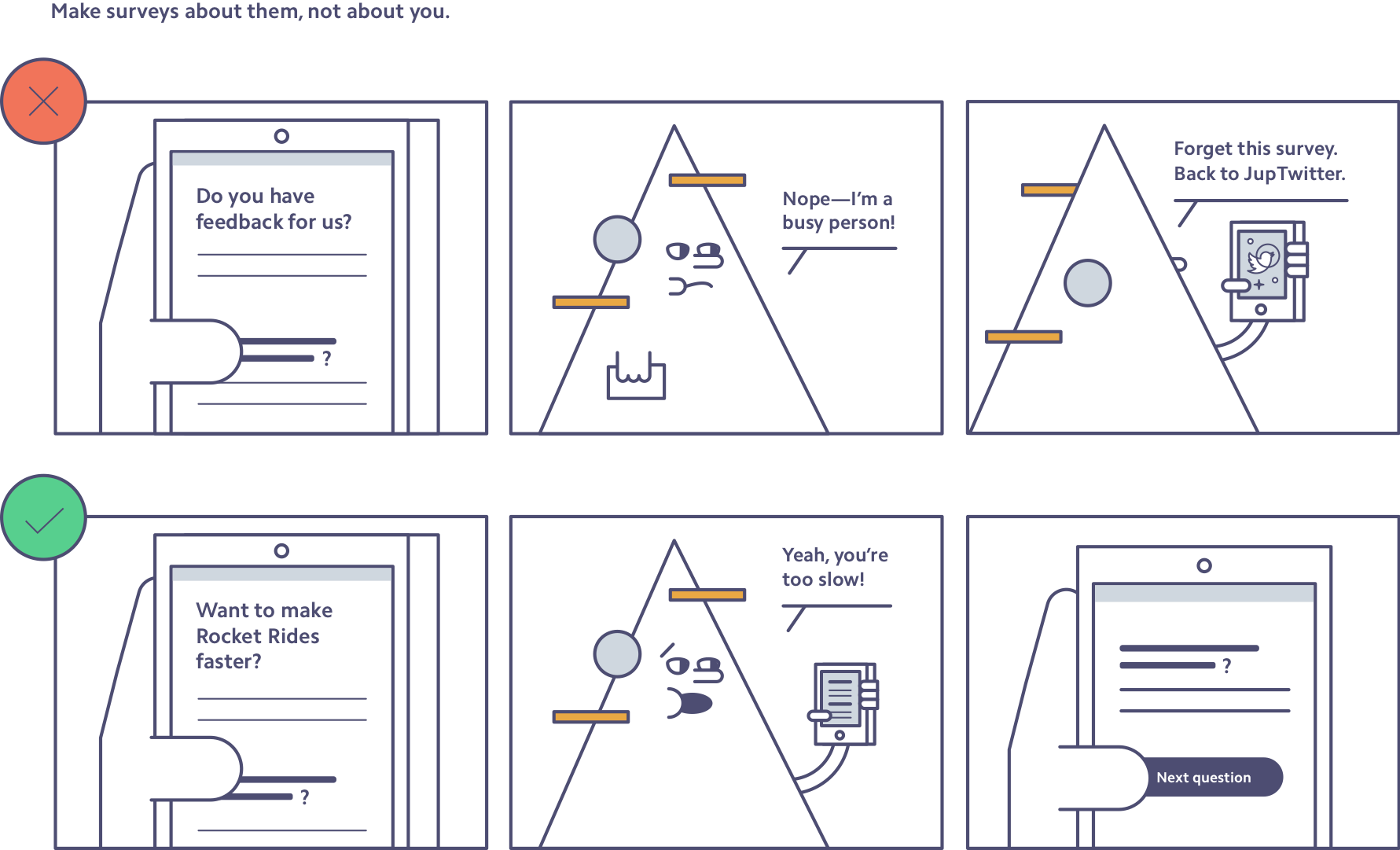

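Make the survey about them, not you. When you introduce your survey, remind people what they gain by taking part. Do not ask for feedback in general terms with a line like "Please take this survey to help us." Tell them how their answers will benefit them, for example by making a page more useful, fixing bugs, or providing better service.

To illustrate, suppose you work at Rocket Rides, an interstellar travel company. Your goal is to build a better experience for users, so you need to know what they think. But for them to finish the survey instead of dropping out to check Twitter, it has to be clear and engaging to humans (and space aliens too):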

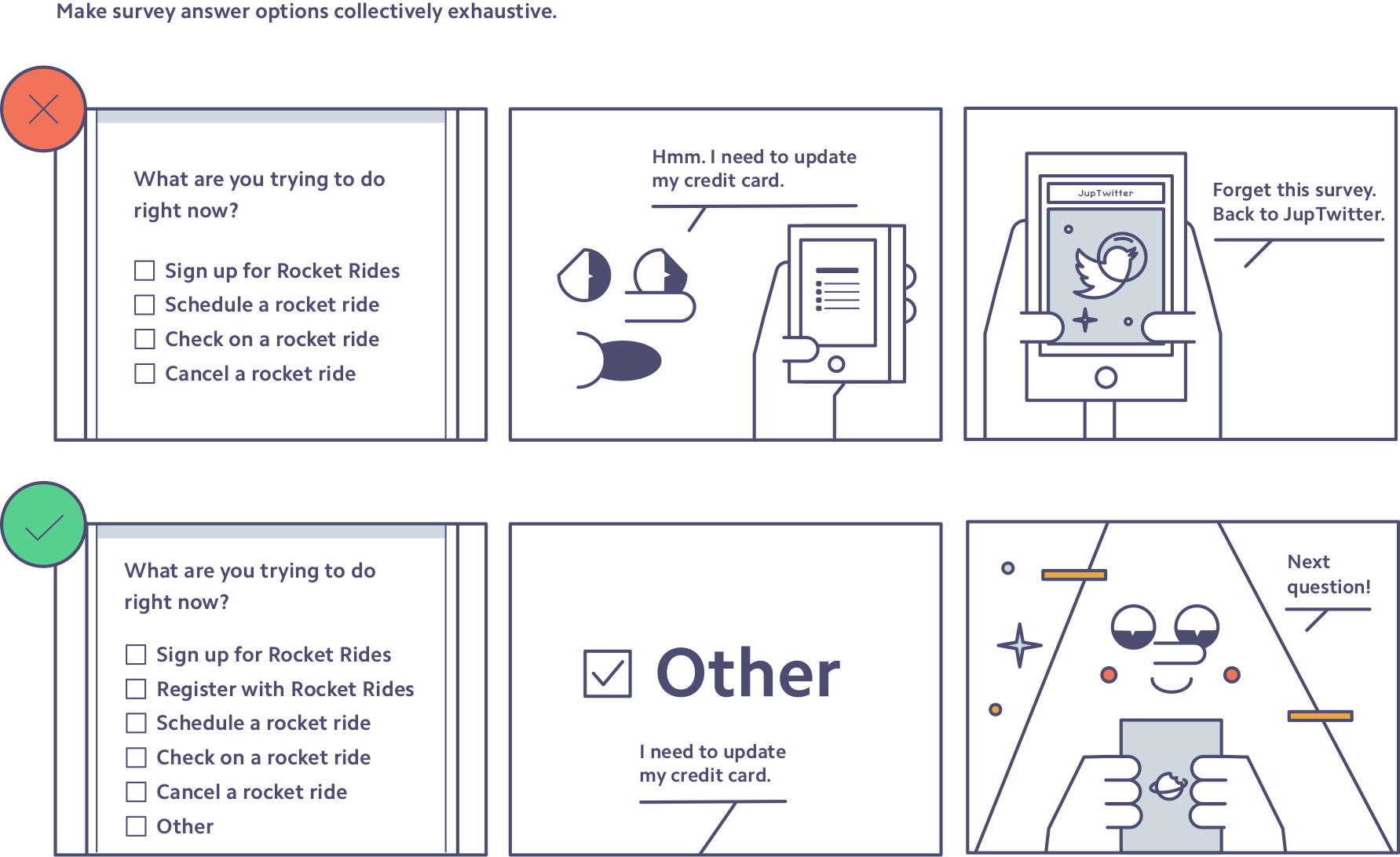

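Make sure the answer options are exhaustive. Every respondent should be able to answer every question, which often means including an "I'm not sure" option and an "Other (please describe):" option. This gives every respondent something to click and also filters out weakly related or irrelevant answers. Here is how the Rocket Rides survey applies this approach: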

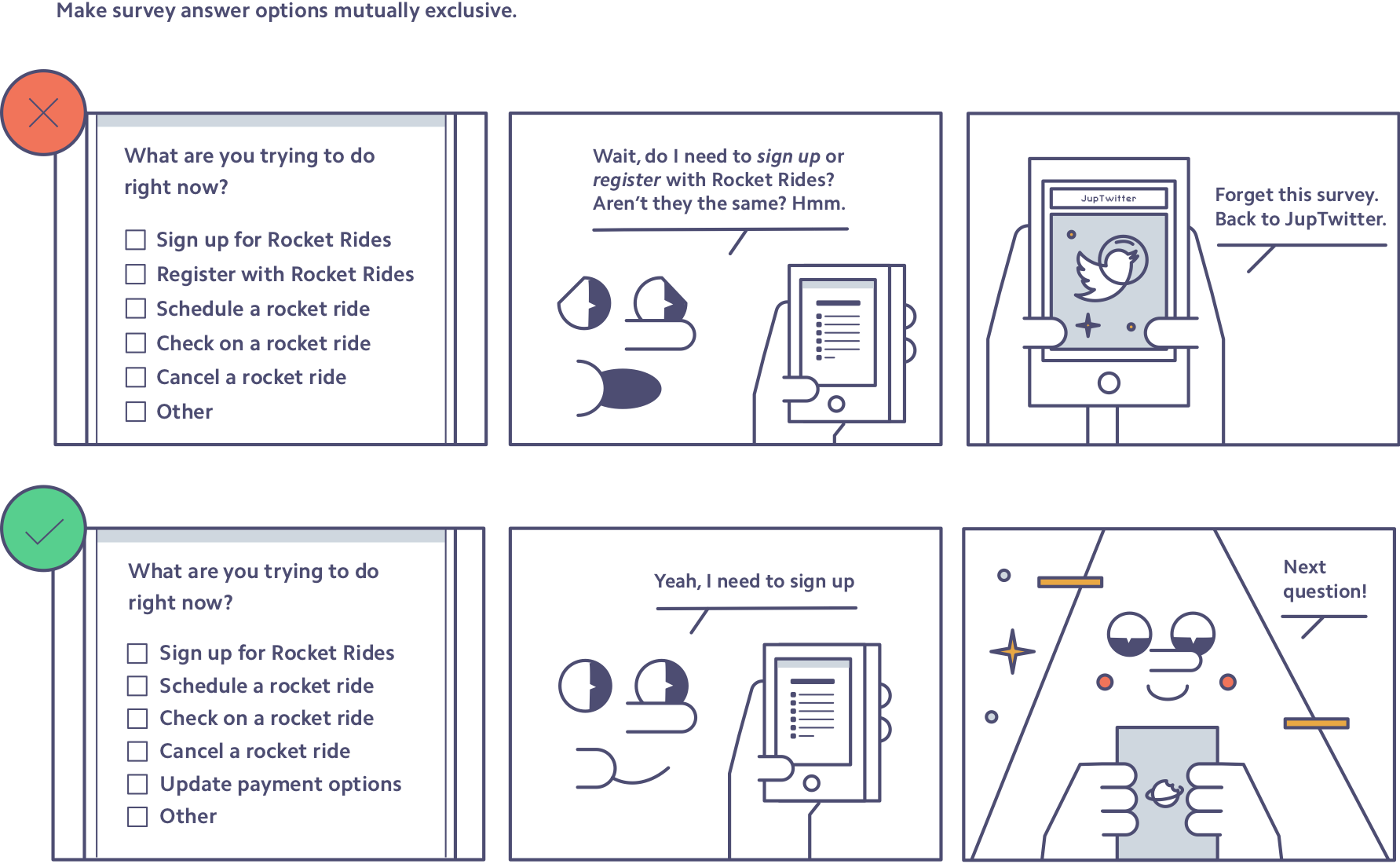

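Make sure the answer options do not overlap. When respondents see overlapping options (for example, "1-5 rides" and "5-10 rides"), they may abandon the survey because it demands too much thought, or they may answer inaccurately. Keep every option distinct from the others (for example, "1-5 rides" and "6-10 rides") to avoid the confusion that drives respondents away.

Here is how Rocket Rides could improve its survey question: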

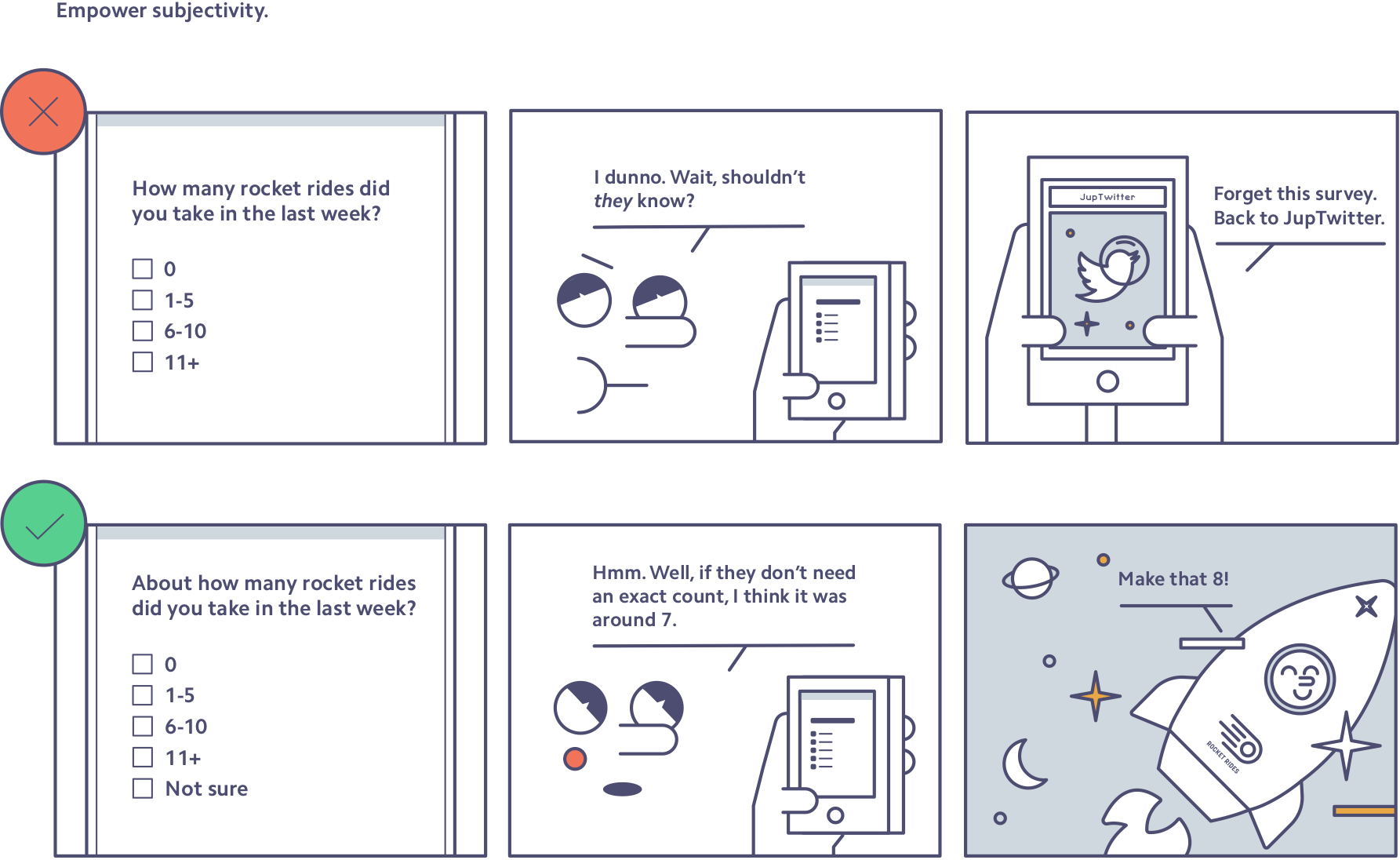
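
For numeric answer ranges, the overlap check described above is mechanical enough to automate before a survey goes out. The following is a minimal sketch in Python, not a description of any tooling Stripe uses. The function names `find_overlaps` and `find_gaps` and the representation of an option as an inclusive `(low, high)` pair are assumptions made for illustration.

```python
from typing import List, Tuple

# Hypothetical representation: one answer option as inclusive (low, high) bounds.
Range = Tuple[int, int]


def find_overlaps(options: List[Range]) -> List[Tuple[Range, Range]]:
    """Return (earlier, later) pairs where the later option shares a value
    with the widest-reaching option that starts before it."""
    if not options:
        return []
    ordered = sorted(options)
    overlaps = []
    widest = ordered[0]  # option with the highest upper bound seen so far
    for current in ordered[1:]:
        if current[0] <= widest[1]:
            overlaps.append((widest, current))
        if current[1] > widest[1]:
            widest = current
    return overlaps


def find_gaps(options: List[Range]) -> List[Range]:
    """Return inclusive integer ranges between the lowest and highest bound
    that no option covers."""
    if not options:
        return []
    ordered = sorted(options)
    gaps = []
    covered_up_to = ordered[0][1]
    for low, high in ordered[1:]:
        if low > covered_up_to + 1:
            gaps.append((covered_up_to + 1, low - 1))
        covered_up_to = max(covered_up_to, high)
    return gaps


if __name__ == "__main__":
    # "1-5 rides" and "5-10 rides" both contain 5, so they overlap.
    print(find_overlaps([(1, 5), (5, 10)]))
    # "1-5 rides" and "6-10 rides" are distinct and leave no gap.
    print(find_overlaps([(1, 5), (6, 10)]), find_gaps([(1, 5), (6, 10)]))
```

This only checks the numeric ranges themselves. Values outside the listed bounds (for example, zero rides or more than ten) still need their own options, along with choices such as "I'm not sure", for the set to be exhaustive.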

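Use wording that invites subjective answers. When you ask people to recall or estimate something (for example, how many customers they have, or how many rocket rides they have taken recently), do not let them get stuck worrying about precision. Words and phrases such as "roughly," "in your opinion," "generally," or "if you had to guess" let people give a subjective answer and move on. Here is how that looks: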

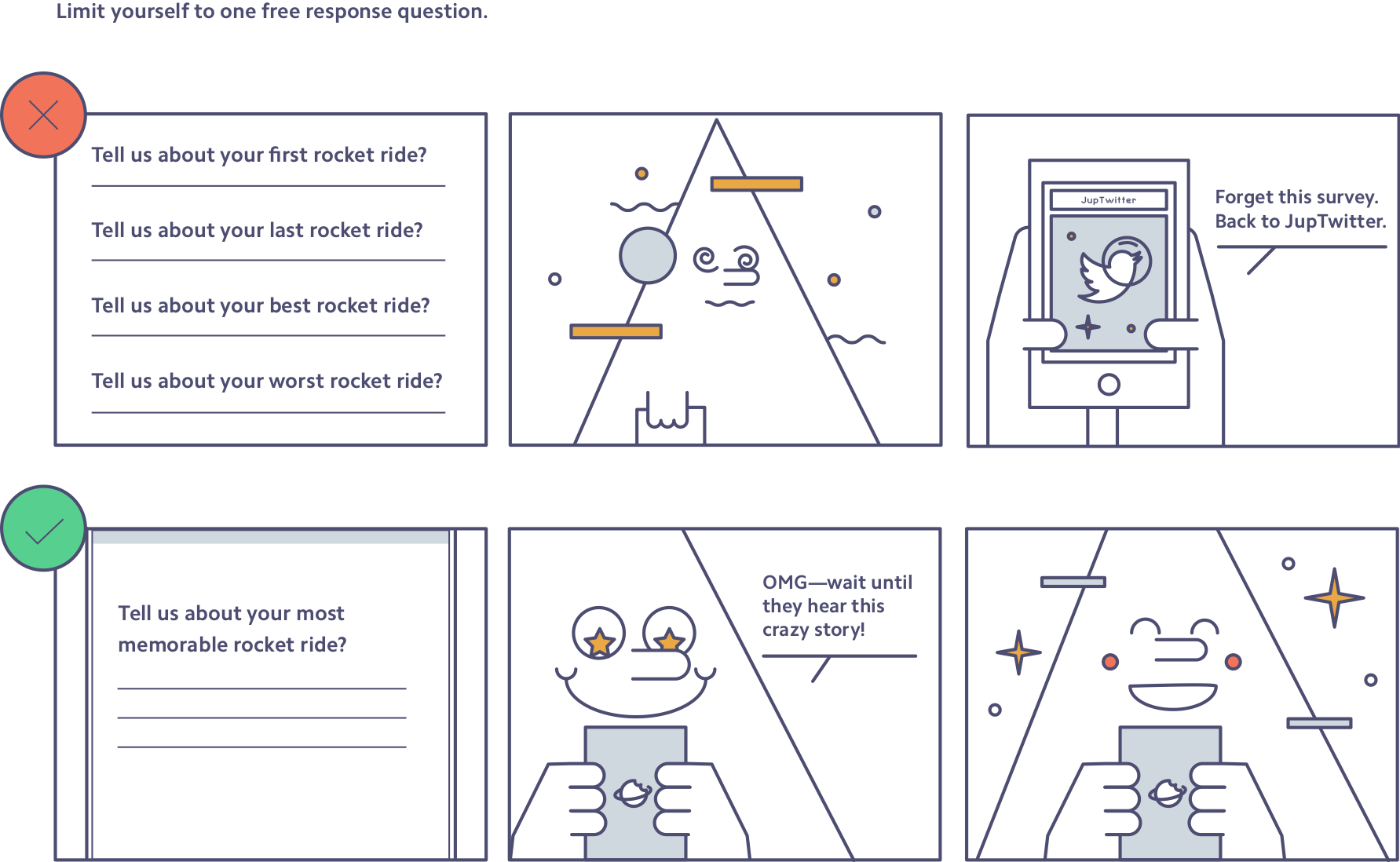

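Put only one open-ended question at the end of the survey. Multiple-choice questions are easy to answer (especially if you follow the advice above), but typing text into a box takes more effort, particularly on a mobile device. Respondents are more likely to abandon a survey when they hit an open-ended question, so these questions belong at the end. By then, your earlier questions have built trust with respondents, familiarized them with the topic, and signaled the kind of information you are looking for. For these reasons, include only one open-ended question, and consider a second only in exceptional cases.

Here is an example of how too many open-ended questions drive respondents away:

Summary: In your outreach, explain how completing the survey benefits respondents, not how it helps the company. Check the answer options of every question against the "mutually exclusive, collectively exhaustive" (MECE) principle. Let respondents answer subjectively and accept approximate numbers: an approximate answer beats an unfinished survey. Include only one open-ended question and place it last to raise the completion rate.

Principle 2: Surveys are branded content.

When people receive an email from your company, they do not think about which employee wrote the survey. They see it as a communication from the company as a whole. A survey tells users directly about the company's priorities, its attention to detail, and its respect for their time. Keep the following advice in mind to create a survey experience that respects users' time and shows your brand at its best: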

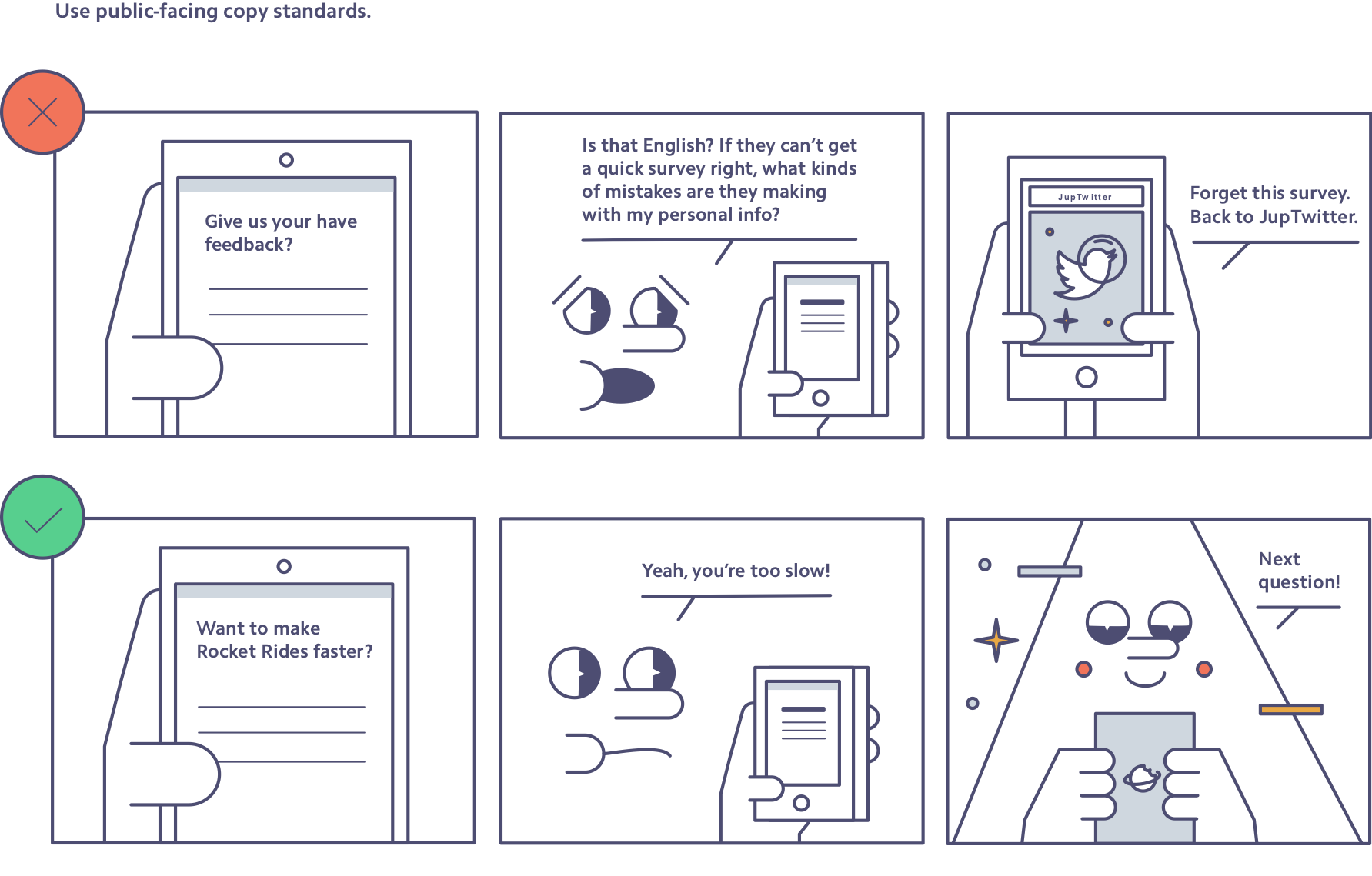

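Apply public-facing copy standards. A survey may be sent privately through a dedicated link, but it is still public-facing content that reflects the company's standards. Its punctuation, grammar, spelling, clarity, and tone should be impeccable. Usually the only way to ensure this is to have someone else check it, so always ask another person to review the survey template before it goes to users. At Stripe, we share surveys with teammates before sending them to catch typos and unclear sentences.

Here is how poor grammar or a spelling mistake can trip up respondents: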

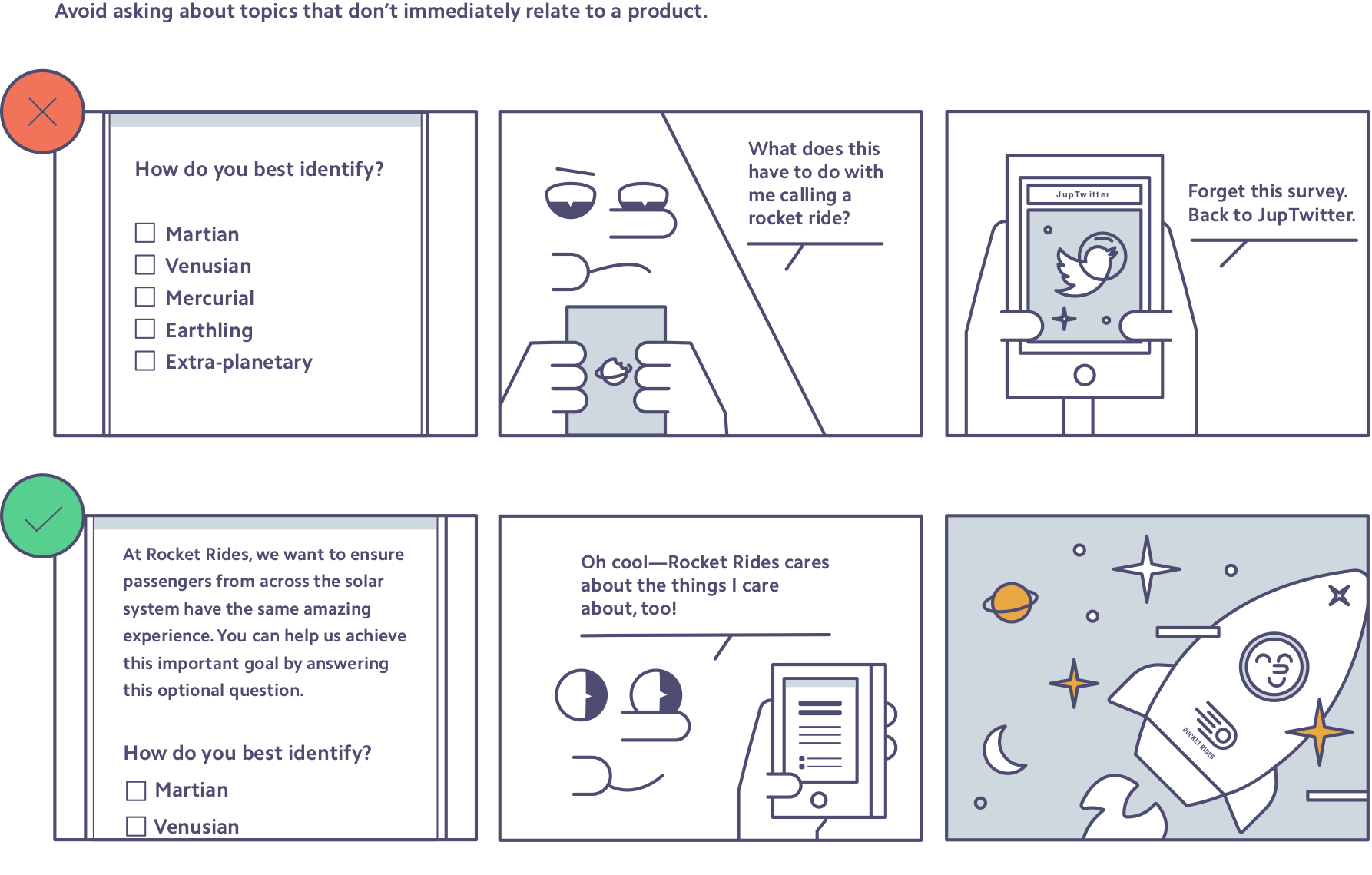

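Avoid topics that are not directly related to your product. A simple rule of thumb: if you would not casually ask a stranger this on a bus, do not ask it in a survey. Topics that can be sensitive or inflammatory and that have no direct connection to your product portfolio (such as politics, religion, sexual orientation, health, family, and educational background) are especially hard to handle in survey form. There are thoughtful ways to ask about them, but they call for great care. Above all, make sure respondents understand why you are asking such personal questions, and tie the questions clearly to something they care about. In general, leave these topics out unless they are critical to your business's success (and consult experts, such as PR staff, beforehand).

Here is an example of how Rocket Rides might ask demographic questions:

Respect users' time. Include only the questions that actually yield the information you need. Ask yourself: "Does this question respect people's time? Does it reflect our company's core priorities?" If the survey runs long, rank the company's priorities and keep only the questions that reflect the top few.

Test the survey with fresh and diverse perspectives. Whether your survey is someone's first impression or just another touchpoint, your brand reputation is at stake. Check the copy, content, and length yourself, then have at least one other person review it. Ideally, the reviewer gives feedback on tone and voice and also spots language or jargon that is hard to understand. Someone from another part of the company makes an excellent reviewer because they bring a fresh perspective.

Summary: Let your survey show your brand at its best. That means copy that stands up to public scrutiny, no unrelated sensitive topics, only priority questions, and respect for users' time. Make it a habit to have another person review the survey (this could be someone on your communications team, since surveys are brand content).

Principle 3: Always clarify and break apart concepts in survey questions.

Your users have probably spent far less time thinking about your product than you have. We often take it for granted that respondents know what we mean when they do not, so do not start from that assumption. Here is how to reach a shared understanding with respondents: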

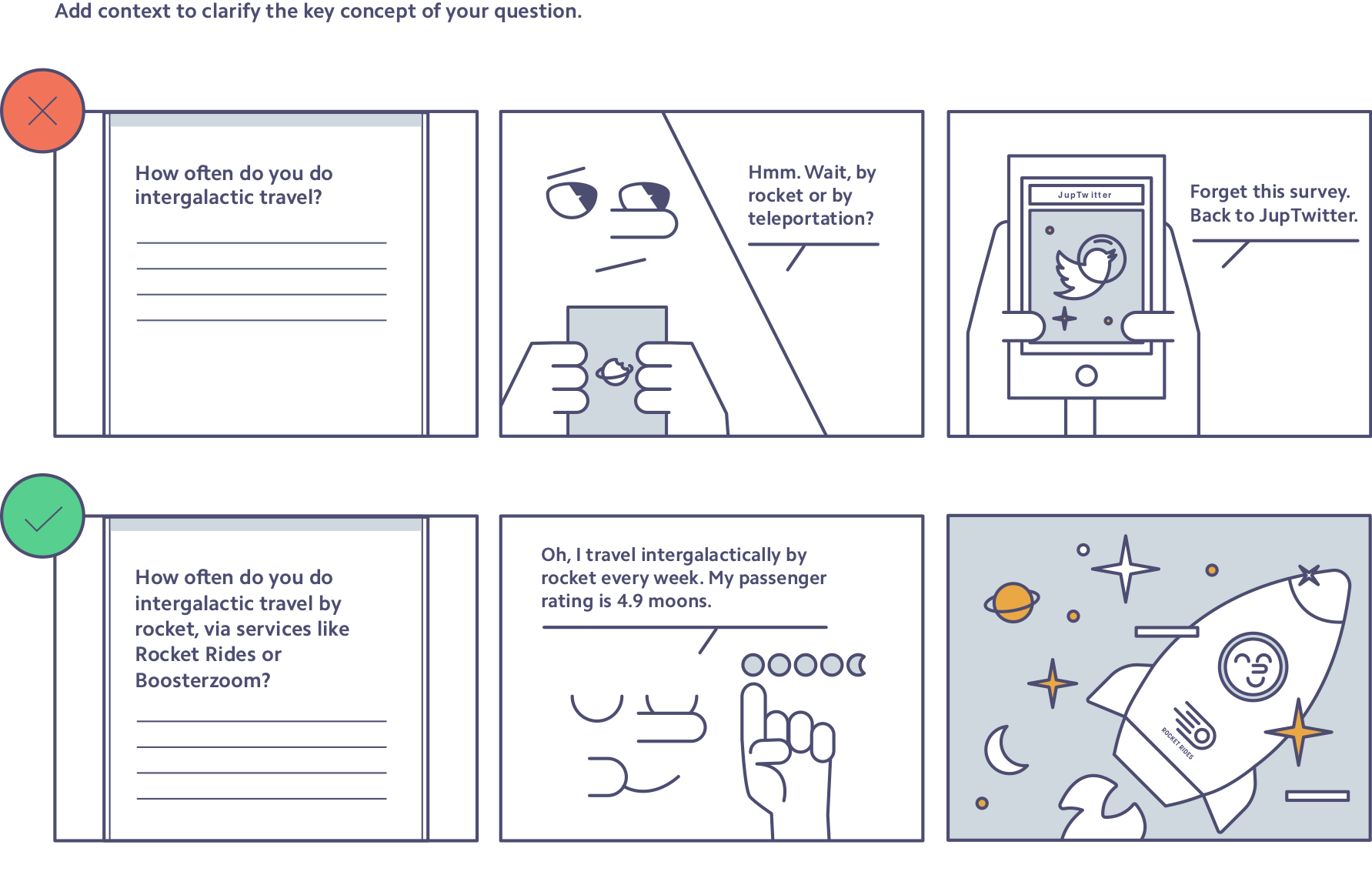

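Describe the key concept in detail. Every survey question should refer to a topic that respondents can recognize and that is clear to them. Take particular care when the key concept is a product name or a technical term, and use several descriptors to make your meaning plain. For example, when Stripe sends a survey, we cannot simply ask about "online payments." We give more context so respondents understand the question: "Roughly how long have you been using online payments (through companies such as Stripe, PayPal, Adyen, Braintree, or Square)?"

Here is an example of how adding context makes a question more precise and easier to answer: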

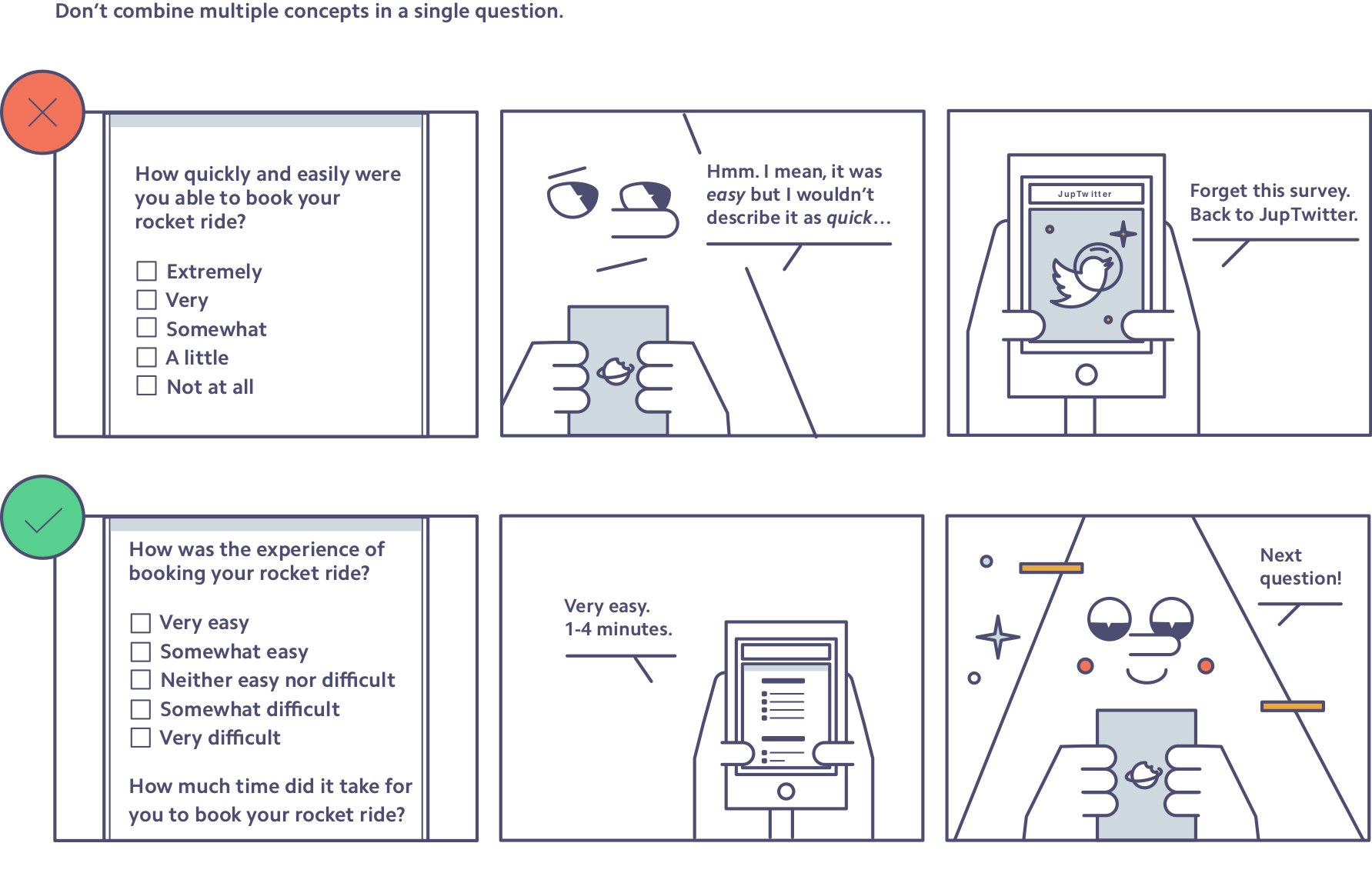

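Do not cover several concepts in one question. It is tempting to cover several aspects of the customer experience in a single question. Say you want to know whether a customer's experience was "quick and easy." Asking "How quick and easy was your experience?" is a leading question (see Principle 4 below), and it may also be impossible to answer. How should someone respond if her experience was very quick but not at all easy? Another example: to assess whether a guide is easy to follow, do not ask "How informative and understandable was this guide?" Split the question into two concepts instead: "How much did you learn from this guide?" and "How clearly was this guide written?" Keeping each question to one concept gives users a better survey experience and makes the collected data easier to analyze.

Here is an example of Rocket Rides applying this approach in a survey: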

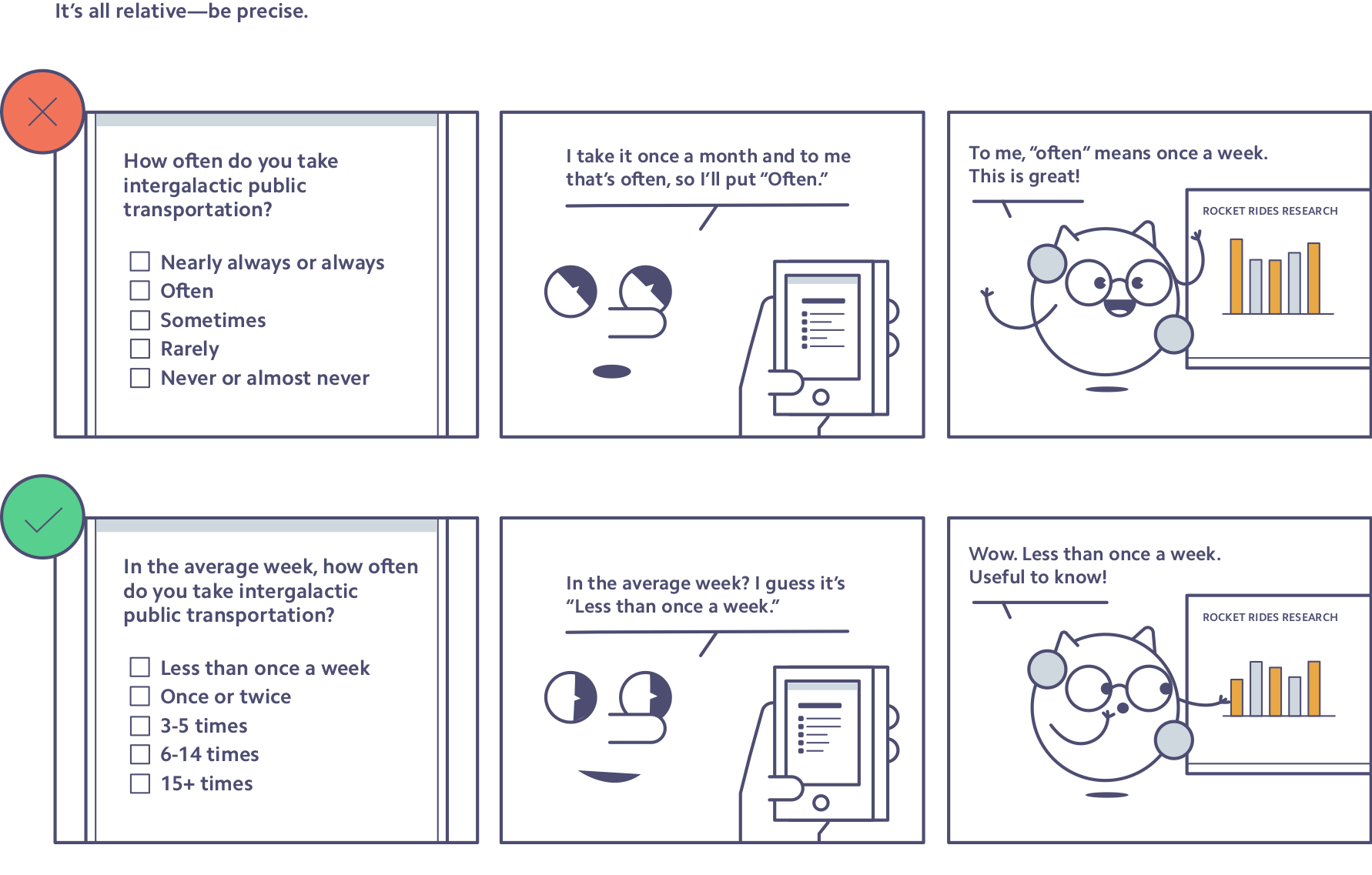

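Everything is relative, so be precise. Relative wording can easily lead to poor data without your noticing. Your idea of "often" may differ greatly from a user's. You may mean "several times a day" while the user is thinking "once a week." Make the answer options very specific so that you know what step to take next.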

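Here is how Rocket Rides might improve a survey question, and the data it could then collect:

Summary: Most problems stem from complexity and ambiguity. Common pitfalls include product names or technical terms, either-or (agree or disagree) question formats, words such as "often" or "sometimes" that can be read several ways, and compound descriptions such as "quick and easy." When in doubt, question your assumptions and spell things out. Providing context, using clear wording, and splitting concepts apart avoids many of these pitfalls.

Principle 4: Counter the tendency to please.

Research shows that people tend to say what they think you want to hear. That is a problem, because you need candid feedback to build the best product. The following advice helps your users tell you honestly when something is wrong with your product: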

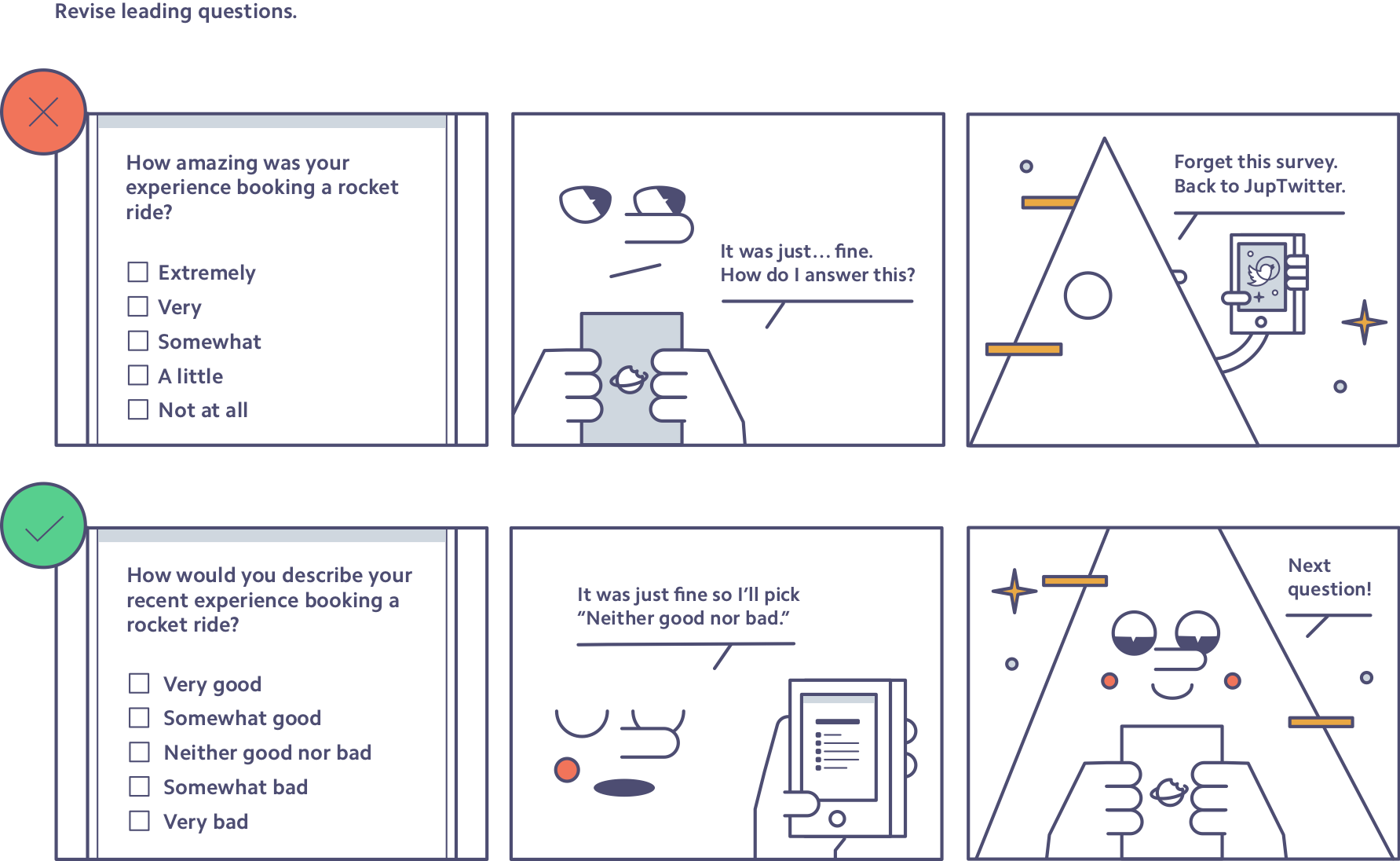

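Revise leading questions. When you ask respondents how positive (or useful, good, or accurate) something is, you subtly steer them toward a positive frame of mind and so prompt a more positive response. International settings complicate matters, because survey research shows that people in different regions answer survey questions differently. In some countries, such as France, Greece, and India, people choose the neutral (middle) option less often and lean toward the extremes, while in other regions, such as the Nordic countries, people are generally less likely to respond positively. Comparing survey results between two countries directly can therefore be difficult. Carefully wording questions in a non-leading way helps even out these differences across markets, and it is a best practice in any case.

The following list of questions runs the full range from clearly leading to neutral:

- How great is this survey?

- How much do you like this survey?

- How often do you recommend this survey?

- Would you recommend this survey?

- How would you rate this survey?

Here is how Rocket Rides might reword a leading question: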

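Avoid agree-disagree scales. Agree-disagree scales (whose options usually run from "strongly agree" to "strongly disagree") produce acquiescence bias, meaning people give more affirmative or agreeing answers than they otherwise would. These scales work well in some cases, usually when the question is extremely simple and clear, but in 95% of cases item-specific responses are better. Item-specific means the answer options correspond closely to the question. Instead of agreeing or disagreeing with a statement, respondents say how strongly they feel about a specific thing or how often they do it.

Here is an example of Rocket Rides rewriting a question that used an agree-disagree scale:

Allow people to disagree. From the start of the survey, use an introduction page or paragraph to tell users that you want their honest feedback, which sets a tone of openness to feedback and eagerness to learn. Research shows that asking for honest feedback at the start of a survey encourages more thoughtful answers. You can also consider adding an open-ended text box to collect feedback even from users who have said they are satisfied with your product or experience. This is another way to show that you sincerely want suggestions for improvement, and it helps you collect honest feedback from users who may have been affected by acquiescence bias.

Summary: Encourage users to be candid with you. Tell respondents at the start of the survey that you want them to speak frankly, and leave them a channel for feedback at the end. Remove or revise any question that could be leading. Also avoid overly positive wording and agree-disagree scales, which can sway respondents' judgment.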

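The underrated role of online surveys

A survey is like an unflinching mirror that clearly reflects the true purpose of the company sending it. People may welcome the chance to engage with a brand, support it, and help it improve, and so take the survey. They may also decline because they are distracted, uninterested, or skeptical. Whatever the initial reaction, the task stays the same: gather feedback and improve the product to serve people better.

A survey makes real progress when the mirror reflects the respondent more than the company. The more attention respondents receive in a survey, the better it works. This shift happens when you:

- Make the survey effortless to take, keep answer options mutually exclusive and collectively exhaustive, and design open-ended questions with care.

- Design, draft, and review the survey as branded content.

- Provide context in survey questions, clarify vague terms, and break apart complex concepts.

- Actively allow respondents to disagree and share sincere feedback.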

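Stripe has surveyed its users since it was founded, yet we are still in the early stages of exploring what surveys can do. Our survey research team keeps refining its methods, giving employees tools to draft and deploy surveys while making sure users enjoy completing them. The balance we aim for is a survey that conveys Stripe's direct yet friendly style and is smooth enough that respondents are glad to share feedback and carry on supporting their businesses.

The balance is challenging, but the rewards are worth it.

Let us know whether you have tried or plan to try these tactics, or whether you have your own insights into survey design best practices. We look forward to hearing them. Please email research@stripe.com. Or, if you are interested in working with us on research at Stripe, apply here.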