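Assessing fraud risk is an ongoing process of identifying attack vectors, fraud patterns, and scenarios, and then mitigating them. In working closely with users, our professional services team has observed that the businesses that do this best often have fraud analysts and data analysts working together. They combine Stripe Radar for Fraud Teams with Stripe Data products, such as Stripe Sigma or Stripe Data Pipeline, to identify both common fraud patterns and patterns specific to their business.

Radar for Fraud Teams helps detect and prevent fraud, and lets you create a bespoke fraud setup unique to your business through custom rules, reporting, and manual reviews. Stripe Sigma is a reporting solution that makes all of your transaction, conversion, and customer data available in an interactive environment in the Stripe Dashboard. Data Pipeline provides the same data but automatically sends it to your Snowflake or Redshift data warehouse, so you can access it alongside your other business data.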

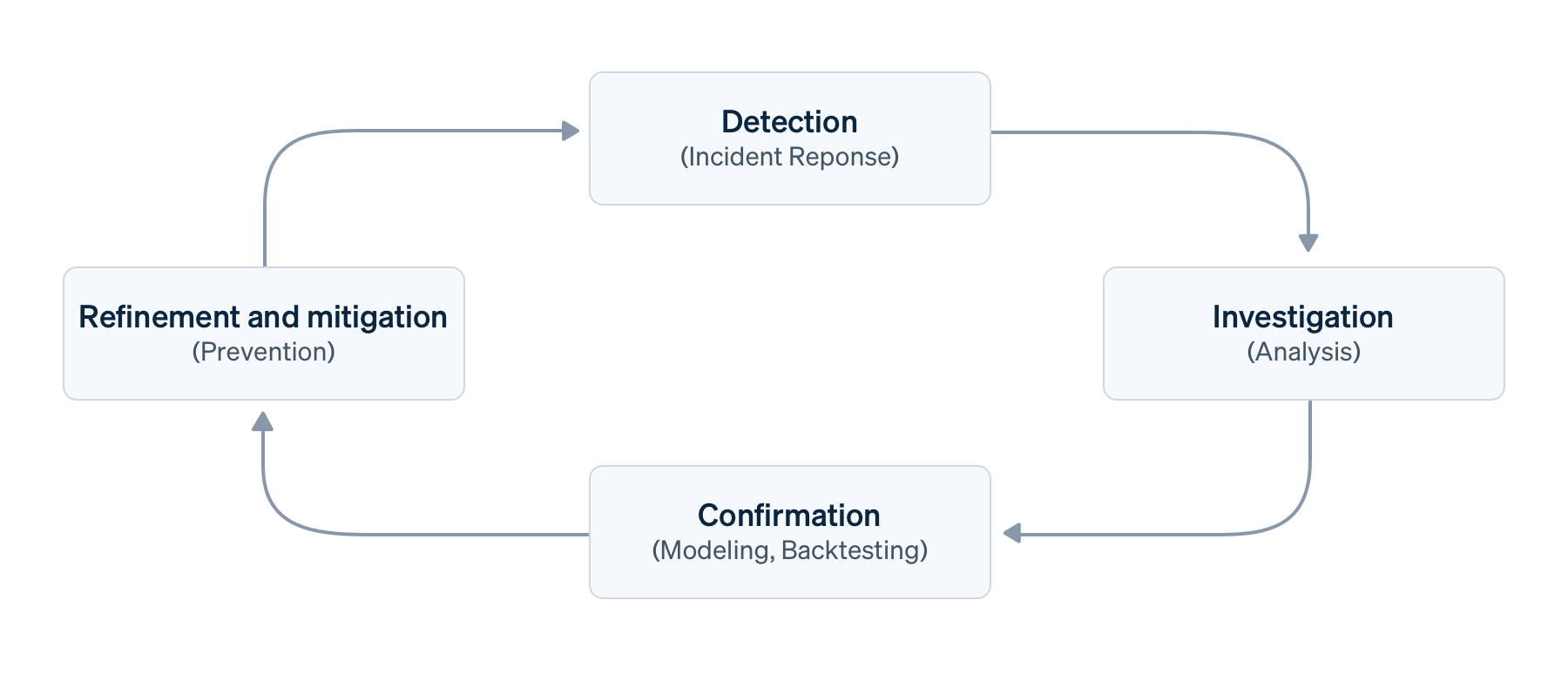

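These tools work together seamlessly to support the four pillars of an effective fraud management process: detection, investigation, confirmation, and refinement and mitigation. We cover each of them in more detail below.

The value of using Radar for Fraud Teams with Stripe Sigma or Data Pipeline

The main purpose of combining Radar for Fraud Teams with Stripe Sigma or Data Pipeline is to analyze Radar data, such as metadata, together with your own data (including pre-authorization, user journey, conversion, and session information) to separate legitimate transactions from fraudulent customer behavior. This lets you optimize the following:

- Time to insight: proactively detect and prevent fraud

- Reaction time: develop preventative and detective rules

- Cost of fraud: including refunds, dispute fees, customer churn, and issuer declines

Our State of Online Fraud report highlights the operational overhead of manual review processes and notes that “the larger [companies] are, the smaller the fraction of transactions they review.” By automating these processes, you free up your fraud analysts to spend more time identifying new attack vectors and developing preventative and detective rules. This means you can strike a better balance between blocking fraudulent traffic and reducing friction for legitimate customers (which reduces churn).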

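A basic transaction fraud management process

Let's assume you have implemented a basic transaction fraud management process, covering detection, investigation, confirmation, and refinement and mitigation, within a wider risk management framework.

- Detection, also known as identification, prediction, or incident response, is the discovery of a data point that warrants further investigation. Detection can be manual (for example, during an incident), semi-automated (through detection rules or monitoring against a baseline), automated (through machine learning or anomaly detection), or externally triggered (for example, by customer feedback or disputes). For detection, Radar's machine learning can automatically find common fraud patterns, while Stripe Sigma can help you find patterns specific to your business.

- Investigation, or exploratory analysis, is the evaluation of suspicious payments or behavior to better understand the business impact. It often involves validation against broader data to rule out noise. Typically, fraud analysts use the Radar review queue or the Stripe Payments Dashboard to investigate.

- Confirmation, also known as modeling or backtesting, is the generalization of a verified fraud attack vector into dimensions and model candidates. It also includes validation and impact assessment using past data and against other rules. Radar's backtesting and simulation features can help fraud analysts do this, but data engineers can use Stripe Sigma for a broader range of scenarios.

- Refinement and mitigation, sometimes also called action or containment, is the implementation of the model: mapping the dimensions and significant features onto Radar rule predicates to prevent, monitor, or reroute fraud. Traditionally, these were static preventative rules, but now that machine learning is an important counterpart to humans and the goal is to increase precision, “refinement” is the more appropriate term. This typically consists of block rules or lists in Radar.

A basic implementation of this process could involve iterative cycles, such as daily checks, sprints, or releases of a rule-based fraud detection setup. However, given that cycle times can differ and feedback loops can happen concurrently, we prefer to view this as a continuous process.

Next, we'll walk through each of the four pillars in detail in the context of a hypothetical scenario and show you how Radar for Fraud Teams and Stripe Sigma or Data Pipeline can help.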

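Our scenario

In our hypothetical scenario, we'll analyze behavior that occurs over a period of time rather than a sudden spike.

Let's say you're an e-commerce business. You have webhook monitoring set up in your observability suite, which charts different payment trends in real time. Over the past few days, you've noticed a steady rise in declines from a card brand called “Mallory” for a product that isn't typically sold in the regions where this card brand is common. (Note: “Mallory” is a fictitious card brand we're using to illustrate this scenario; for instance, it could be a brand that isn't on the Enhanced Issuer Network.) There are no sales promotions or other events that could explain this shift, so your team needs to investigate what's happening and decide on next steps.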

检测

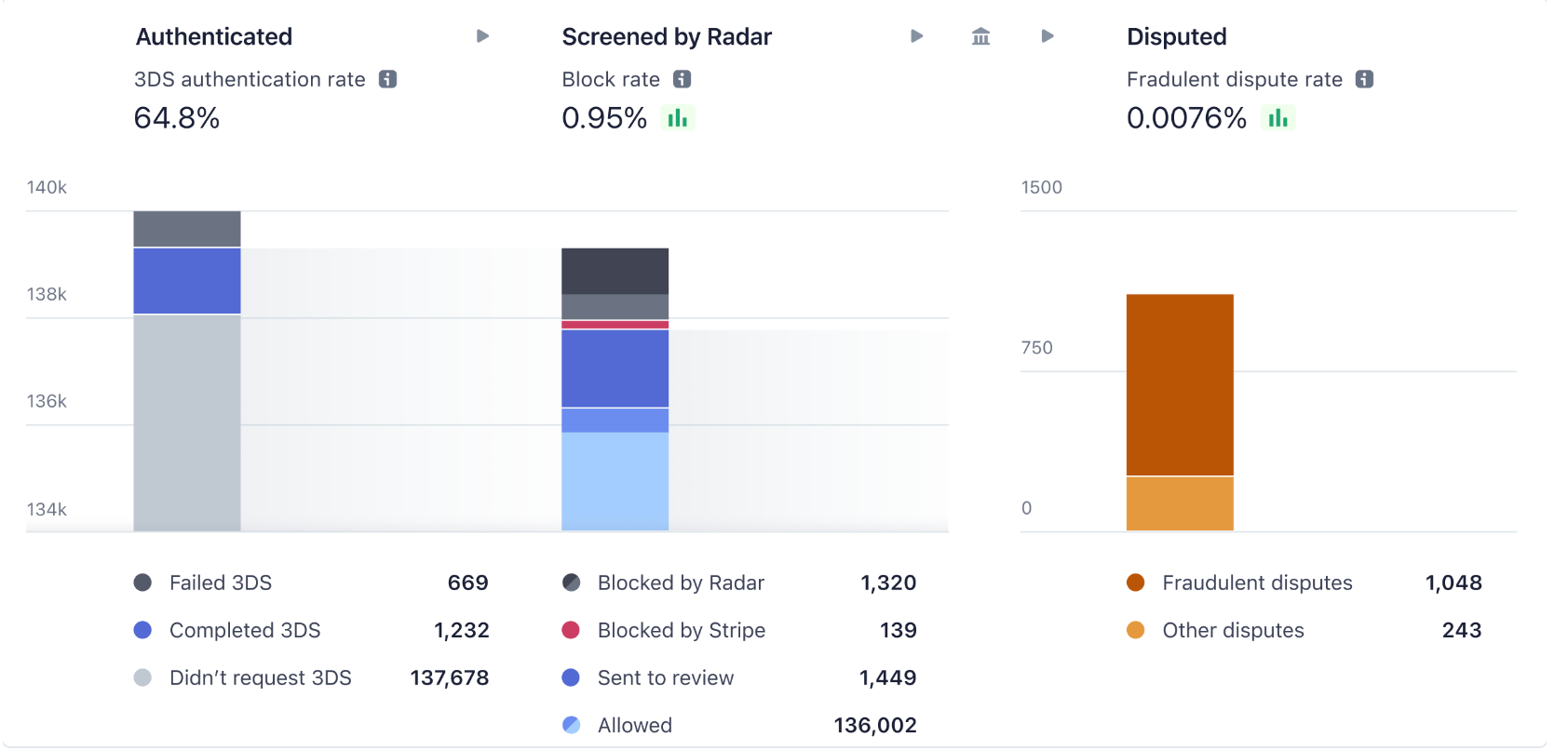

Stripe 的默认规则是利用机器学习来预测、检测和拦截大量欺诈性支付。Radar 分析管理平台可以让您快速概览欺诈趋势。对于需要更精细控制支付审核、放行或拦截流程的商家而言,规则是定制化欺诈保护的得力工具。

在检测新型欺诈形式前,您需要先建立预测信号基准线,例如现有规则的表现。换句话说,您需要了解自身业务的“正常”状态,或“良好”交易的特征。这需要欺诈分析师与数据工程师协作完成(他们也可能与 DevOps 团队及其可观测性工具套件合作)。在我们的假设情境中,通过持续监控,系统检测到“Mallory”银行卡类型被拒交易量出现增长。
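To make the idea of monitoring against a baseline concrete, here is a minimal Python sketch. It assumes you already export a daily decline rate per card brand; the function name `flag_anomalies`, the three-standard-deviation threshold, and all sample values are illustrative assumptions, not part of Radar or Stripe Sigma.

```python
from statistics import mean, stdev

def flag_anomalies(baseline, recent, threshold=3.0):
    """Return (day, rate) pairs from `recent` whose rate exceeds the
    baseline mean by more than `threshold` sample standard deviations."""
    limit = mean(baseline) + threshold * stdev(baseline)
    return [(day, rate) for day, rate in recent if rate > limit]

# Hypothetical daily decline rates for one card brand (illustrative only).
baseline_rates = [0.080, 0.085, 0.078, 0.082, 0.079, 0.081, 0.083]
recent_rates = [("day 1", 0.084), ("day 2", 0.110), ("day 3", 0.150)]

flagged = flag_anomalies(baseline_rates, recent_rates)
for day, rate in flagged:
    print(f"{day}: decline rate {rate:.1%} is above the baseline limit")
```

A fixed multiple of the standard deviation is only one possible alert condition. For a slow-moving pattern like the one in this scenario, comparing against a trailing baseline may work better than a static one.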

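Stripe Sigma tables with relevant fraud data

To detect emerging fraud patterns, first establish baseline performance using features such as the issuer decline or authorization rate and the Radar block rate. Next, query for fraudulent disputes, early fraud warnings (issuer fraud records), and payments with high velocity, a high issuer decline rate, or a high Radar risk score. Ideally, you schedule this query to run daily against the available data and visualize the results in dashboards with past data, including weekly and monthly slices, without ever needing to query Stripe Sigma or your data warehouse manually. This significantly shortens incident response time.

The following tables are the most relevant: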

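| Stripe Sigma/Data Pipeline table name | Description |
|---|---|
| | Charge objects in Payments (raw charges post-authentication, not Payment Intents) |
| | Issuer fraud records sent by some payment schemes (note that they aren't always early and don't always turn into a dispute) |
| | The actual Radar rules, including their syntax (especially with rule decisions) |
| | Customer objects: important for deduplication and address information (for example, cardholder name and ZIP code) |
| | Authentication attempts when using 3DS to add a verification step |
| | Tracks the final actions Radar takes on transactions |
| | (New) Tracks the actual rule values after each transaction is evaluated |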

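Your fraud or business analysts should identify which additional breakdown dimensions are worth evaluating, based on your business domain. The Radar rule attributes table in Radar for Fraud Teams contains detailed information about existing transactions evaluated by Radar, but it doesn't go back further than April 2023 and doesn't include metadata or final outcomes. For earlier data, you need to query the following fields: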

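| Breakdown dimension | Field in the Radar rule attributes table | Field in the data schema |
|---|---|---|
| Card brand, funding, or instrument type | card_brand | |
| Wallet use | digital_wallet | charges.card_tokenization_method |
| Customer or card country or region | card_country | charges.card_country |
| Card fingerprint (for reuse) | card_fingerprint | charges.card_fingerprint |
| Amount (single or over time) | amount_in_usd | charges.amount |
| CVC and ZIP (AVS) checks per transaction | cvc_check address_zip_check | |
| Billing and shipping customer data (especially ZIP code and cardholder name) | shipping_address_postal_code billing_address_postal_code and similar fields | customer.address_postal_code and similar fields |
| Product segment | N/A | |
| Radar risk score | risk_score | charges.outcome_risk_score |
| Transaction outcome | N/A | charges.outcome_network_status |
| Decline reason | N/A | charges.outcome_reason |
| Customer (individual, clustered, or segmented by account age or country, with shipping and billing addresses kept separate) | customer | payment_intents.customer_id |
| Connected accounts of a platform (individual, clustered, or segmented by account age or country) | destination | |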

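The new Radar rule attributes table tracks the same dimensions and more for every transaction that Radar evaluates, using the exact names of the Radar attributes. For example, you can track velocity trends such as name_count_for_card_weekly.

While there are many ways to visualize trends, a simple pivoted line chart per breakdown is an effective way to compare against other factors. When you drill down during the investigation phase, you might want to combine several breakdowns. The following example table shows the product-segment breakdown of the rise in declined transactions from the “Mallory” card type: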

| day_utc_iso | product_segment | charge_volume | dispute_percent_30d_rolling | raw_high_risk_percent |
|---|---|---|---|---|
| 2022/8/25 | gift_cards | 521 | 0.05% | 0.22% |
| 2022/8/25 | stationery | 209 | 0.03% | 0.12% |
| 2022/8/26 | gift_cards | 768 | 0.04% | 0.34% |
| 2022/8/26 | stationery | 156 | 0.02% | 0.14% |
| 2022/8/27 | gift_cards | 5,701 | 0.12% | 0.62% |
| 2022/8/27 | stationery | 297 | 0.03% | 0.1% |
| 2022/8/28 | gift_cards | 6,153 | 0.25% | 0.84% |
| 2022/8/28 | stationery | 231 | 0.02% | 0.13% |
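The sample rows above are in long format: one row per day and segment. As a minimal sketch of the pivot that a line chart needs, the Python below turns them into one series per product segment. The helper name `pivot_by_segment` is an assumption made for illustration, and the second segment label is written in English as `stationery`.

```python
from collections import defaultdict

# Charge volume from the sample table: (day, product segment, volume).
rows = [
    ("2022/8/25", "gift_cards", 521), ("2022/8/25", "stationery", 209),
    ("2022/8/26", "gift_cards", 768), ("2022/8/26", "stationery", 156),
    ("2022/8/27", "gift_cards", 5701), ("2022/8/27", "stationery", 297),
    ("2022/8/28", "gift_cards", 6153), ("2022/8/28", "stationery", 231),
]

def pivot_by_segment(rows):
    """Turn long (day, segment, value) rows into {day: {segment: value}}."""
    wide = defaultdict(dict)
    for day, segment, value in rows:
        wide[day][segment] = value
    return dict(wide)

wide = pivot_by_segment(rows)
for day, segments in wide.items():
    print(day, segments)
```

Each inner dictionary becomes one point per line in the chart, which makes the jump in gift card volume on 2022/8/27 easy to spot next to the flat stationery series.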

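You can visualize the data in the spreadsheet tool of your choice as follows:

Let's look in more detail at an example Stripe Sigma or Data Pipeline query that returns baseline data. In the query below, you'll see the daily dispute, decline, and block rates, among others, as separate columns. During detection and investigation, wide, sparse data in separate columns is often easier to visualize. It also makes it easier to map columns onto Radar predicates later. However, your data scientists might prefer a long, dense format for exploratory analysis during investigation, or for machine learning during the confirmation and the refinement and mitigation phases.

In this example, we include payment metadata to show a breakdown by product segment. In our scenario, a wide-table approach would call for similar queries for card brand (“Mallory”) and funding type. Here we deduplicate retry attempts to focus on actual intents and get a better sense of magnitude. We chose to deduplicate on payment intents; deeper integrations might send a field (for example, an order ID in metadata) to ensure deduplication across the whole user journey. This example shows how you can increase the precision of your anti-fraud measures by adding another factor. In our hypothetical scenario, that factor is the product segment “gift cards.” We'll return to ways of increasing precision later, in the refinement and mitigation section.

Note that we've simplified the queries in this guide for readability. For example, we don't consider leading or lagging indicators independently, such as 3DS failures, disputes, or early fraud warning creation times. We also don't include customer lifecycle data and other metadata from the full conversion funnel, such as reputation or risk score. Also note that data in Stripe Sigma and Data Pipeline doesn't show payments in real time.

This query doesn't include the actual dispute time, because it's a lagging indicator, but we've included a few sample indicators, for example, the number of retries as an indicator of card testing. In this query, we get a few daily metrics in a simple way: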

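- Charge volume, both deduplicated and raw values: for example, 1,150 charges per day, of which 100 are declined and 50 are blocked by Radar, across 1,000 payment intents.

- Authorization rate: in this example, roughly 90% for charges, because blocked payments never reach the issuer, and 100% for payment intents, because all of them eventually succeeded on retry.

- High-risk and block rate: in this case, both are 1.6% (all 50 high-risk payments were also blocked).

- Retroactive dispute rate for payments from the same period: for example, 5 out of every 1,000 charges are disputed. The number of disputes per day of payments rises as later disputes come in, so we include the query execution time to track how it changes.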
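The authorization and dispute figures in the list above can be reproduced with a few lines of arithmetic. The Python below is a sketch under stated assumptions: the function name `daily_metrics` and the choice of denominators (charges that reached the issuer for the charge authorization rate, authorized charges for the dispute rate) are our own reading of the example, not the guide's query.

```python
def daily_metrics(charges, declined, blocked, intents, succeeded_intents, disputed):
    """Compute simple daily rates from raw counts.

    Assumed denominators (illustrative):
    - charge authorization rate: charges that reached the issuer
    - intent authorization rate: deduplicated payment intents
    - dispute rate: authorized charges
    """
    sent_to_issuer = charges - blocked      # blocked charges never reach the issuer
    authorized = sent_to_issuer - declined
    return {
        "charge_auth_rate": authorized / sent_to_issuer,
        "intent_auth_rate": succeeded_intents / intents,
        "dispute_rate": disputed / authorized,
    }

# Counts from the example: 1,150 charges, 100 declined, 50 blocked,
# 1,000 payment intents that all eventually succeeded, 5 disputes.
metrics = daily_metrics(1150, 100, 50, 1000, 1000, 5)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

With these inputs, 1,000 of the 1,100 charges sent to the issuer are authorized, which is where the figure of roughly 90% comes from.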

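As mentioned, we've simplified these queries for readability. In practice, a query like this would have more dimensions, for example, trends, deviations, or loss differences.

We've also included an example of a 30-day rolling average using a window function. More sophisticated approaches are possible, such as using percentiles instead of averages to identify long-tail attacks, but they're rarely needed for initial fraud detection, because trendlines matter more than perfectly accurate numbers.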
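For readers who want to check a rolling average outside the warehouse, here is a minimal Python sketch of a trailing mean. It is an illustration rather than the guide's query; the function name `rolling_mean` and the sample values are assumptions.

```python
from collections import deque

def rolling_mean(values, window=30):
    """Trailing mean over the last `window` values.

    Early positions average over fewer values, which mirrors a SQL
    window frame that ends at the current row.
    """
    buf = deque(maxlen=window)
    total = 0.0
    out = []
    for v in values:
        if len(buf) == window:
            total -= buf[0]   # this value is evicted by the append below
        buf.append(v)
        total += v
        out.append(total / len(buf))
    return out

# Hypothetical daily dispute counts, smoothed over a 2-day window for brevity.
smoothed = rolling_mean([1, 2, 3, 4], window=2)
print(smoothed)  # [1.0, 1.5, 2.5, 3.5]
```

A trailing window only looks backward, so it is suitable for daily monitoring, where future values are not yet known.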

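Once you have a baseline, you can start tracking anomalies and trends, for example, investigating a rise in fraud or block rate from a specific country or payment method (in our hypothetical scenario, the card brand “Mallory”). Anomalies are typically investigated with reports in a dashboard, through manual analysis, or with ad hoc queries in Stripe Sigma.

Investigation

Once your analysts have found an anomaly to investigate, the next step is to query the data in Stripe Sigma (or in your data warehouse through Data Pipeline) and build a hypothesis. You'll want to include breakdown dimensions based on your hypothesis, such as payment method (card usage), channel or surface (metadata), customer (reputation), or fraud-prone products (risk category). Later, in the confirmation phase, you might call these dimensions “features,” and they map onto Radar rule predicates.

In our hypothetical case, a large volume of prepaid card transactions from “Mallory” have a higher fraud rate. You can represent this as a grouping dimension and pivot on it with your analysis tools. At this stage, the query is usually iterated on and tweaked, so it's a good idea to include several rule predicate candidates, such as narrowed or broadened variations of the hypothesis, and to strip out minor features to gauge their impact. For example: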

| is_rule_1a_candidate1 | is_rule_1a_candidate1_crosscheck | is_rule_1a_candidate2 | is_rule_1a_candidate3 | event_date | count_total_charges |
|---|---|---|---|---|---|
| True | False | True | True | Before | 506 |
| False | False | True | False | Before | 1,825 |
| True | False | True | True | After | 8,401 |
| False | False | True | False | After | 1,493 |

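This approach gives you an idea of magnitude so you can prioritize by impact. The table shows, with reasonable confidence, that rule candidate 3 appears to catch the excess malicious transactions. A more sophisticated evaluation could be based on accuracy, precision, or recall. You can produce similar output with the query below.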
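One way to read the table above is to compare charge volume before and after the change for each value of a candidate flag. The Python below is a minimal sketch of that comparison using the four sample rows; the helper name `growth_by_flag` and the lowercase period labels are assumptions made for illustration.

```python
# Sample rows: (candidate1, crosscheck, candidate2, candidate3, period, count)
rows = [
    (True, False, True, True, "before", 506),
    (False, False, True, False, "before", 1825),
    (True, False, True, True, "after", 8401),
    (False, False, True, False, "after", 1493),
]

def growth_by_flag(rows, flag_index):
    """Return {flag value: after/before volume ratio} for one candidate column."""
    totals = {}
    for row in rows:
        key = (row[flag_index], row[4])
        totals[key] = totals.get(key, 0) + row[5]
    growth = {}
    for flag in (True, False):
        before = totals.get((flag, "before"))
        after = totals.get((flag, "after"))
        if before and after is not None:
            growth[flag] = after / before
    return growth

growth = growth_by_flag(rows, flag_index=3)  # column is_rule_1a_candidate3
print(growth)
```

For candidate 3, matching traffic grows by a factor of about 16.6 while non-matching traffic shrinks slightly, which supports the reading that this candidate isolates the excess volume. Candidate 2 matches every row, so it cannot separate the two groups.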

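In this query, we've left out deduplication for readability, but we include the dispute rate and the early fraud warning rate, which are important for card brand monitoring programs. These are lagging indicators, though, and in this simplified query we only track them retrospectively. We also include a random sample of payments for cross-checking and deeper investigation of the patterns the query surfaces, which we discuss further below.

You might want to break more metrics down into histograms to identify clusters, which helps you define velocity rules for rate limiting (for example, total_charges_per_customer_hourly).

Identifying trends through histogram analysis is an excellent way to understand your customers' expected behavior across their lifecycle and conversion funnel. Adding it to the query above would make it too complex, but here's a simple example of how to break it down without complicated rolling-window logic, assuming you have a customer type (for example, guest users) in metadata:

In our scenario, you might not want to block all prepaid cards from “Mallory,” but you still want to confidently identify other correlated risk drivers. For example, the following velocity query could increase confidence and help you avoid blocking low-frequency guest customers.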
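As a rough illustration of this kind of velocity breakdown, the Python sketch below buckets charges per customer per hour and then counts how many customer-hours fall into each bucket, split by customer type. The tuple layout, the identifiers, and the name `velocity_histogram` are hypothetical, and the data is invented for the example.

```python
from collections import Counter

# Hypothetical charges: (customer_id, customer_type, hour bucket), one per charge.
charges = [
    ("g1", "guest", 10),
    ("g2", "guest", 10), ("g2", "guest", 10), ("g2", "guest", 10), ("g2", "guest", 10),
    ("r1", "registered", 10), ("r1", "registered", 10),
    ("r1", "registered", 11),
]

def velocity_histogram(charges):
    """Count customer-hours by (customer type, charges in that hour)."""
    per_customer_hour = Counter(charges)  # identical tuples = same customer and hour
    hist = Counter()
    for (_customer_id, customer_type, _hour), n in per_customer_hour.items():
        hist[(customer_type, n)] += 1
    return hist

hist = velocity_histogram(charges)
for (customer_type, n), customer_hours in sorted(hist.items()):
    print(f"{customer_type}: {n} charge(s)/hour in {customer_hours} customer-hour(s)")
```

If most legitimate guests sit in the one-charge bucket while the suspicious traffic clusters at higher counts, the gap between the clusters suggests where a velocity threshold could go.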

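A simple way to investigate is to look at samples directly in the Dashboard, using the “Related payments” view, to understand the behavior (that is, the attack vector or fraud pattern) and the associated Radar risk insights. That's why we included random payment samples in the query above. This way, you can also find more recent payments that aren't yet available in Stripe Sigma. A more defensive, higher-touch approach is to model your hypothesis as a review rule that still allows payments through but routes them to your analysts for manual review. Some customers do this to consider refunding payments below the dispute fee while blocking those above it.

Confirmation

Moving forward, let's assume the pattern you identified isn't simple: it can't be mitigated by refunding and blocking the fraudulent customer, and it doesn't simply belong on a default block list.

After identifying and prioritizing a new pattern to address, you need to analyze its potential impact on legitimate revenue. This isn't a simple optimization problem, because the optimal amount of fraud is nonzero. Among the different model candidates, choose the one that represents the best trade-off for your risk appetite, based either on simple magnitude or on precision and recall. This process is sometimes called backtesting, especially when rules are written first and then validated against your data. (You can also work the other way around: discover patterns first, then write rules.) For example, instead of writing one rule per country, you can write a rule like this to simplify confirmation:

Block if :card_funding: = 'prepaid' and :card_country: in @prepaid_card_countries_to_block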
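Before putting a list-based rule like this in place, you can estimate how many past charges it would have matched. The Python below is a minimal offline sketch, not Radar's rule engine: the dictionary keys mirror the Radar attribute names, while the placeholder country codes and sample charges are hypothetical.

```python
# Hypothetical stand-in for the @prepaid_card_countries_to_block list.
PREPAID_CARD_COUNTRIES_TO_BLOCK = {"XA", "XB"}  # placeholder country codes

def matches_rule(charge, blocked_countries):
    """Offline equivalent of: prepaid funding AND card country in the list."""
    return (
        charge["card_funding"] == "prepaid"
        and charge["card_country"] in blocked_countries
    )

# Illustrative historical charges (amounts in USD).
charges = [
    {"card_funding": "prepaid", "card_country": "XA", "amount": 40},
    {"card_funding": "credit", "card_country": "XA", "amount": 25},
    {"card_funding": "prepaid", "card_country": "XC", "amount": 60},
    {"card_funding": "prepaid", "card_country": "XB", "amount": 15},
]

matched = [c for c in charges if matches_rule(c, PREPAID_CARD_COUNTRIES_TO_BLOCK)]
matched_amount = sum(c["amount"] for c in matched)
print(f"{len(matched)} of {len(charges)} charges match, worth {matched_amount} USD")
```

Keeping the countries in a list means the rule itself stays unchanged when a country is added or removed, so earlier backtests remain comparable.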

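The query shared in the investigation section above could also be used as a model here, but with a focus on different values. We discuss this later in the section on refinement and mitigation techniques.

Mapping the Stripe Sigma schema to Radar rules

Now you need to translate your exploratory queries from Stripe Sigma or Data Pipeline into a mapping onto Radar rules that you can use for backtesting. Assuming you're sending the right signals to Radar, here are some common mappings: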

| Radar rule name | Stripe Sigma table and column |
|---|---|
| address_zip_check | charges.card_address_zip_check |
| amount_in_xyz | |
| average_usd_amount_attempted_on_customer_* | |
| billing_address_country | charges.card_address_country |
| card_brand | charges.card_brand |
| card_country | charges.card_country |
| card_fingerprint | charges.card_fingerprint |
| card_funding | charges.card_funding |
| customer_id | Payment intents.customer_id |
| card_count_for_customer_* | Payment intents.customer_id and charges.card_fingerprint |
| cvc_check | charges.card_cvc_check |
| declined_charges_per_card_number_* | |
| declined_charges_per_*_address_* | |
| destination | charges.destination_id (Connect platforms) |
| digital_wallet | charges.card_tokenization_method |
| dispute_count_on_card_number_* | |
| efw_count_on_card_* | early fraud warning and charges.card_fingerprint |
| is_3d_secure | payment method details.card_3ds_authenticated |
| is_3d_secure_authenticated | payment method details.card_3ds_succeeded |
| is_off_session | Payment intents.setup_future_usage |
| risk_score | charges.outcome_risk_score |
| risk_level | charges.outcome_risk_level |

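The last two, risk_level and risk_score, differ from the others because the machine learning model itself derives them from other factors. Rather than writing overly complex rules, we recommend relying on Radar's machine learning, which we discuss further in the section on refining with machine learning.

The new Radar rule attributes table records the same dimensions and more for every transaction that Radar actually evaluated, and it keeps the exact names of the Radar attributes.

The table above shows the standard set of attributes and deliberately omits signals that you'd customize to your customer journey, such as Radar Sessions or metadata.

Based on the investigation above, let's say you arrive at a rule like this:

Block if :card_funding: = 'prepaid' and :card_brand: = 'mallory' and :amount_in_usd: > 20 and ::CustomerType:: = 'guest' and :total_charges_per_customer_hourly: > 2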

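The next step is to confirm the rule's impact on your actual payment transactions. Typically, you'd do this with block rules. Read our Radar for Fraud Teams: Rules 101 guide for help writing correct rule syntax. A simple way to test a block rule is to create it in test mode and then create manual test payments to verify that it works as intended.

Both block rules and review rules can be backtested in Radar using the Radar for Fraud Teams simulation feature.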
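Alongside simulation in Radar, you can estimate precision and recall for a rule candidate against labeled historical payments. The Python below is a sketch under stated assumptions: the `is_fraud` label (for example, derived from disputes or early fraud warnings), the `customer_type` key standing in for the metadata field, the condition on card brand for “Mallory,” and the five sample payments are all hypothetical.

```python
def precision_recall(payments, rule):
    """Return (precision, recall) of `rule` against the `is_fraud` label."""
    tp = fp = fn = 0
    for p in payments:
        hit = rule(p)
        if hit and p["is_fraud"]:
            tp += 1
        elif hit:
            fp += 1
        elif p["is_fraud"]:
            fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def candidate_rule(p):
    """Offline stand-in for the block rule candidate described above."""
    return (
        p["card_funding"] == "prepaid"
        and p["card_brand"] == "mallory"
        and p["amount_in_usd"] > 20
        and p["customer_type"] == "guest"
        and p["total_charges_per_customer_hourly"] > 2
    )

def payment(funding, brand, amount, ctype, hourly, is_fraud):
    """Build one illustrative payment record."""
    return {
        "card_funding": funding, "card_brand": brand, "amount_in_usd": amount,
        "customer_type": ctype, "total_charges_per_customer_hourly": hourly,
        "is_fraud": is_fraud,
    }

payments = [
    payment("prepaid", "mallory", 50, "guest", 5, True),    # caught fraud
    payment("prepaid", "mallory", 30, "guest", 3, False),   # false positive
    payment("prepaid", "mallory", 15, "guest", 4, True),    # missed: amount too low
    payment("credit", "visa", 100, "registered", 1, False), # untouched legitimate
    payment("prepaid", "mallory", 80, "guest", 6, True),    # caught fraud
]

precision, recall = precision_recall(payments, candidate_rule)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Comparing these two numbers across candidates makes the trade-off explicit: a stricter amount threshold tends to raise precision and lower recall, and the reverse holds for a looser one.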

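One advantage of the Radar for Fraud Teams simulation feature is that it takes the effect of other existing rules into account. Rule maintenance isn't the focus of this guide, but removing and updating rules should also be part of your continuous improvement process. In general, keep the number of rules small enough that each rule is atomic, can be monitored with the baseline queries developed in the detection and investigation phases, and can be backtested cleanly without side effects (for example, rule 2 only working because rule 1 filters out something else).

You can also do this with a review rule instead of a block rule, which we cover in the next section.

Refinement and mitigation

Finally, after testing your block rules, you apply the model to prevent, monitor, or reroute fraud. We call this “refinement” because it's about increasing the precision of your overall anti-fraud measures, especially in tandem with machine learning. You can use several techniques to increase precision. Sometimes this step is instead called “containment” or “mitigation,” and it happens at the same time as confirmation: rather than using review rules, A/B tests (metadata), or simulations to evaluate the model, you block the suspicious transactions immediately to reduce the immediate risk.

Even if you've already taken action, there are techniques you can use to refine the model you developed in steps 1 through 3 and improve results over time: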

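Refining with review rules

If you don't want to risk a high false-positive rate that could hurt your revenue, you can choose to implement review rules. Although this is a more manual process and might add friction to the customer experience, review rules let more legitimate transactions eventually go through. (You can also add throttling, in the form of velocity rules, to existing block rules to handle slower-paced transactions.) A more advanced option for using review rules is A/B testing, which is especially useful for managing the total number of cases in the review queue. You can use metadata from your payments to allow a small amount of traffic through during A/B testing, for example, from known customers or simply by using a random number. We recommend adding this to your block rules rather than creating allow rules, because allow rules override block rules and therefore make it harder to track a block rule's performance over time.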
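A simple way to produce a stable split for such an A/B test is to hash an identifier into a bucket on your side and send the result as payment metadata. The Python below is a minimal sketch; the function name `ab_bucket`, the 5% holdout, and the idea of keying on an order ID are assumptions, not a Stripe feature.

```python
import hashlib

def ab_bucket(payment_key, holdout_percent=5):
    """Deterministically assign a key to 'holdout' or 'treatment'.

    Hashing instead of calling random() keeps retries of the same
    order in the same bucket.
    """
    digest = hashlib.sha256(payment_key.encode("utf-8")).hexdigest()
    slot = int(digest, 16) % 100            # stable slot in the range 0..99
    return "holdout" if slot < holdout_percent else "treatment"

# Hypothetical order ID; the bucket would be attached as payment metadata.
bucket = ab_bucket("order_12345")
print(bucket)
```

The block rule could then include a metadata condition so that holdout traffic is let through, with the metadata key name being whatever your integration chooses to send.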

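Refining rules by monitoring their performance

To monitor rule performance, you can check the charge outcome object in the Payment Intents API, specifically the rule object. Similarly, in Stripe Sigma, you can use the charges.outcome_rule_id, charges.outcome_type, and payment_intents.review_id fields for analysis. Here's an example of how to track rule performance in Stripe Sigma using the special Radar rule decisions table: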
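If you export these fields, a per-rule summary takes only a few lines. The Python below is an illustrative sketch rather than a Sigma query: the keys `outcome_rule_id` and `outcome_type` follow the column names mentioned above, while the `disputed` flag, the rule identifiers, and the sample rows are hypothetical.

```python
from collections import defaultdict

def rule_performance(charges):
    """Aggregate charge, blocked, and disputed counts per rule ID."""
    stats = defaultdict(lambda: {"charges": 0, "blocked": 0, "disputed": 0})
    for c in charges:
        s = stats[c["outcome_rule_id"]]
        s["charges"] += 1
        s["blocked"] += int(c["outcome_type"] == "blocked")
        s["disputed"] += int(c["disputed"])
    return {rule_id: dict(s) for rule_id, s in stats.items()}

# Illustrative exported rows; a rule ID of None means no rule matched.
charges = [
    {"outcome_rule_id": "rule_a", "outcome_type": "blocked", "disputed": False},
    {"outcome_rule_id": "rule_a", "outcome_type": "blocked", "disputed": False},
    {"outcome_rule_id": "rule_b", "outcome_type": "manual_review", "disputed": True},
    {"outcome_rule_id": None, "outcome_type": "authorized", "disputed": False},
]

performance = rule_performance(charges)
for rule_id, s in performance.items():
    print(rule_id, s)
```

Tracking these counts per day and per rule lets you see when a rule stops matching, or when reviewed payments begin turning into disputes.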

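Refining with machine learning

Typically, after blocking the immediate attack, the next step is to iteratively refine the rule on top of machine learning to reduce false positives, which lets more legitimate traffic, and therefore revenue, through.

Take BIN or IIN (issuer identification number) blocking as an example. During a card testing attack, you might temporarily add a BIN to a block list, which gives the issuer time to fix the gap. However, blocking an issuer outright could reduce your revenue; a more refined approach is to apply more scrutiny and refine the model over time. This is a good moment to revisit how to write effective rules and how to assess risk, particularly with Radar's risk score. We generally recommend combining Radar's machine learning with your own intuition. Rather than blocking all high-risk transactions with a single rule, combining the risk score with manual rules that represent a risk model or scenario often strikes a better balance between blocking malicious traffic and protecting revenue. For example:

Block if :card_funding: = 'prepaid' and :card_brand: = 'mallory' and :card_country: in @high_risk_card_countries_to_block and :risk_score: > 65 and :amount_in_usd: > 10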

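Refining with 3DS

As mentioned, although this guide doesn't cover the breadth of 3D Secure (3DS), you should consider it as part of your risk mitigation strategy. For example, while liability shift might reduce dispute fees for fraudulent transactions, those transactions still count toward card monitoring programs and affect your user experience. Instead of using a fixed amount, you can refine this rule from “block all relevant charges” to “request 3DS”:

Request 3D Secure if :card_country: in @high_risk_card_countries_to_block or :ip_country: in @high_risk_card_countries_to_block or :is_anonymous_ip: = 'true' or :is_disposable_email: = 'true'

Then, use a rule to block cards that didn't authenticate successfully or otherwise don't provide liability shift:

Block if (:card_country: in @high_risk_card_countries_to_block or :ip_country: in @high_risk_card_countries_to_block or :is_anonymous_ip: = 'true' or :is_disposable_email: = 'true') and :risk_score: > 65 and :has_liability_shift: != 'true'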
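The two rules above form a two-stage decision: first decide whether to request 3DS, then decide whether to block once the authentication outcome is known. The Python below is a sketch of that logic for offline reasoning only. The dictionary keys mirror the Radar attribute names, and the placeholder country codes and sample payment are hypothetical.

```python
HIGH_RISK_COUNTRIES = {"XA", "XB"}  # hypothetical stand-in for the list

def risky_context(p, countries):
    """Shared condition used by both rules."""
    return (
        p["card_country"] in countries
        or p["ip_country"] in countries
        or p["is_anonymous_ip"]
        or p["is_disposable_email"]
    )

def should_request_3ds(p, countries):
    """Stage 1: request 3DS for any payment in a risky context."""
    return risky_context(p, countries)

def should_block(p, countries):
    """Stage 2: block risky, high-score payments without liability shift."""
    return (
        risky_context(p, countries)
        and p["risk_score"] > 65
        and not p["has_liability_shift"]
    )

p = {
    "card_country": "XA", "ip_country": "XC",
    "is_anonymous_ip": False, "is_disposable_email": False,
    "risk_score": 80, "has_liability_shift": False,
}
print(should_request_3ds(p, HIGH_RISK_COUNTRIES), should_block(p, HIGH_RISK_COUNTRIES))
```

A payment with the same attributes but a successful authentication that grants liability shift would pass the second stage, which is how the block rate drops without removing scrutiny.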

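Refining with lists

Using default lists or maintaining custom lists is an effective way to block attacks during an incident without the risk of changing rules. For example, you can block fraudulent customers, email domains, or card countries. Part of refinement is deciding which patterns should become a rule, which should change a rule predicate, and which can simply be added to an existing list. Break-glass rules are a good example of a stopgap used during an incident that can later be refined into a list or a change to an existing rule.

Feedback process

Refinement also means going back to step 1: monitoring rule performance and fraud patterns. Good monitoring depends on an established baseline and on single, atomic changes, which give you the best traceability, precision, and recall in backtesting. For this reason, we recommend changing rules and predicates only in clear, distinct deployments, and otherwise relying on lists, reviews, and proactive blocking and refunding.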

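How Stripe can help

Radar for platforms

Are you a platform using Stripe Connect? If so, any rules you create apply only to payments created on the platform account (in Connect terms, these are destination charges or on-behalf-of charges).

Payments created on a connected account are subject to that account's rules.

Radar for Terminal

Stripe Terminal charges aren't screened by Radar. This means that if you use Terminal, you can write rules based on IP frequency without worrying about blocking your in-person payments.

Stripe professional services

Stripe's professional services team can help you implement a continuously improving fraud management process. Whether you're strengthening your existing integration or launching a new business model, our experts provide guidance to help you build on your existing Stripe solution.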

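Conclusion

In this guide, we've seen how to use Stripe Sigma or Data Pipeline to build a baseline and a variety of fraud models that represent attack scenarios and patterns only you and your business can judge. We've also shown how you can extend and fine-tune Radar to react to a wider range of fraudulent transactions based on your custom rules.

Because payment fraud keeps evolving, this process must keep evolving too, as outlined in our model of detection, investigation, confirmation, and refinement and mitigation. Running these cycles continuously with a fast feedback loop lets you spend less time reacting to incidents and more time developing a proactive fraud strategy.

You can learn more about Radar for Fraud Teams here. If you're already a Radar for Fraud Teams user, check out the Rules page in your Dashboard to get started with writing rules.

You can learn more about Stripe Sigma here and about Stripe Data Pipeline here.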