Assessing fraud risk is a continuous process of identifying attack vectors, patterns and scenarios, and mitigating them. In working closely with users, our professional services team has observed that the businesses that do this most effectively often have fraud analysts and data analysts working together – using a combination of Stripe Radar for Fraud Teams and Stripe Data products such as Stripe Sigma or Stripe Data Pipeline to identify both common fraud patterns and patterns specific to their business.

Radar for Fraud Teams helps detect and prevent fraud and gives you the ability to create a bespoke fraud setup unique to your business, with custom rules, reporting and manual reviews. Stripe Sigma is a reporting solution that makes all of your transactional, conversion and customer data available within an interactive environment in the Stripe Dashboard. Data Pipeline provides the same data, but automatically sends it to your Snowflake or Redshift data warehouse so it can be accessed alongside your other business data.

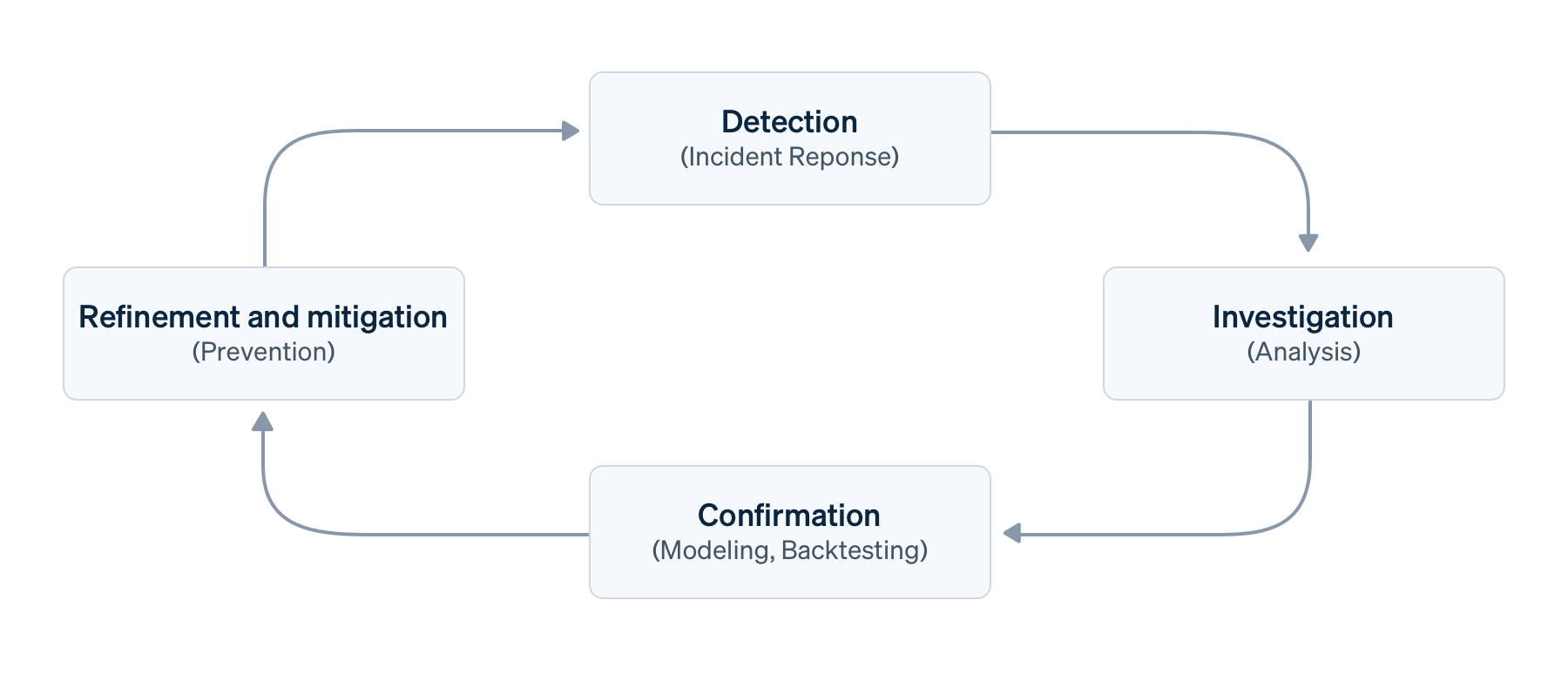

These tools work seamlessly together to cover the four pillars of an effective fraud management process: Detection, Investigation, Confirmation, and Refinement and mitigation – which we'll cover in more depth.

The value of using Radar for Fraud Teams with Stripe Sigma or Data Pipeline

The primary objective of using Radar for Fraud Teams with Stripe Sigma or Data Pipeline is to analyse Radar's data, such as metadata, alongside your own data – including pre-authorisation, user journey, conversion and session information – to separate legitimate transactions from fraudulent customer behaviour. This way, you can optimise:

- Time to insight to proactively detect and prevent fraud

- Reaction time to developing preventative and detective rules

- Cost of fraud, which includes refunds, dispute fees, customer churn, and issuer declines

Our State of online fraud report highlights the operational overhead of manual review processes and that "the larger [companies] are, the smaller the fraction of transactions they review". By automating these processes, you can free up your fraud analysts to spend more time identifying new attack vectors and developing preventative and detective rules. This means you can strike a better balance between blocking fraudulent traffic and reducing friction for legitimate customers (churn).

A basic transaction fraud management process

Let’s assume you have a basic transaction fraud management process of Detection, Investigation, Confirmation, and Refinement and mitigation that takes place within a larger risk framework.

- Detection, also known as identification, prediction or incident response, is the discovery of a data point that warrants further investigation. Detection may be manual (e.g. during an incident), semi-automated (via detective rules or monitoring against your baseline), automated (via machine learning or anomaly detection) or externally triggered (e.g. customer feedback or disputes). When it comes to detection, Radar's machine learning can automatically find common fraud patterns, while Stripe Sigma can help you find patterns specific to your business.

- Investigation, or explorative analysis, is the evaluation of suspicious payments or behaviour to better understand the business impact; this often involves verification against broader data to eliminate noise. Typically fraud analysts will use the Radar review queue or Stripe's Payments Dashboard to investigate.

- Confirmation, also called modelling or back-testing, is the generalisation of the verified fraud attack vector into dimensions and model candidates. This also covers the validation and impact assessment using past data and against other rules. Radar's back-testing and simulation functionality can help fraud analysts to do this, but data engineers can use Stripe Sigma for a wider range of scenarios.

- Refinement and mitigation, sometimes referred to as action or confinement, is the implementation of the model – mapping the dimensions and significant features onto Radar rule predicates to prevent, monitor or re-direct fraud. Traditionally, these would have been static preventative rules, but now that machine learning is an important counterpart for humans and the goal is to increase precision, Refinement is the more appropriate term. This would usually comprise block rules or lists in Radar.

A basic implementation of this process could involve iterative cycles such as daily checks, sprints or releases of a rules-based fraud detection setup. However, given that cycle times can be different and feedback loops can occur concurrently, we prefer to see this as a continuous process.

Next, we'll look at each of these four pillars in detail in the context of a hypothetical scenario and show you how Radar for Fraud Teams and Stripe Sigma or Data Pipeline can help.

Our scenario

In our hypothetical scenario, we’ll analyze behavior occurring over a period of time, as opposed to a sudden spike.

Let’s say you are an ecommerce business. You have webhook monitoring set up in your observability suite that plots various charts for payment trends in real time. You’ve noticed a steady increase in the volume of declines from a card brand called “Mallory” over the past few days—for a product that’s not usually sold in the region where this card brand is commonly used. (Note: Mallory is a fictitious card brand we’re using to bring this scenario to life; for instance, this could be a brand not on the Enhanced Issuer Network.) There are no sales promotions or other incidents that could explain this shift, so your team needs to investigate what’s happening and decide the next course of action.

Detection

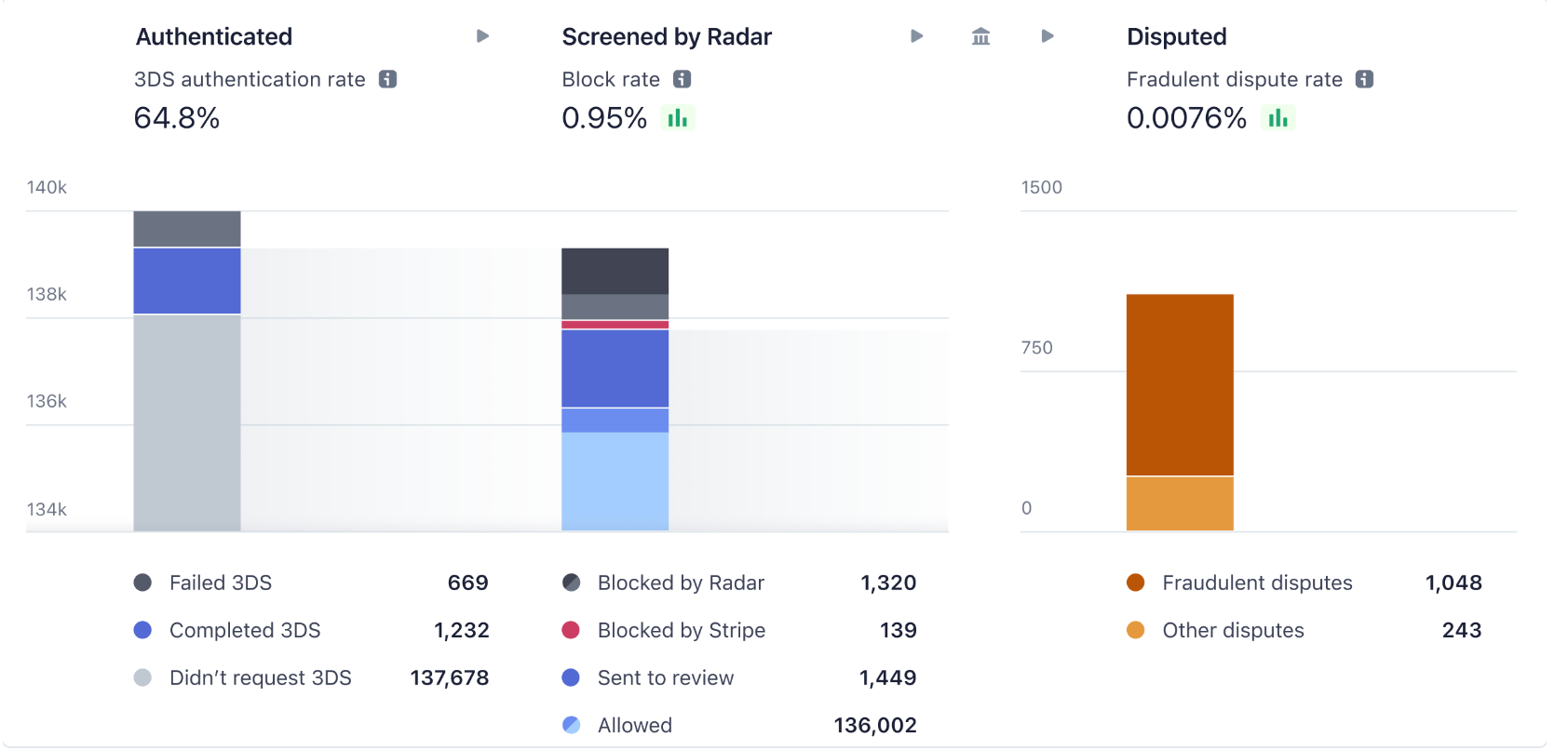

Stripe’s default rules use machine learning to predict, detect, and block a substantial number of fraudulent payments. The Radar analytics dashboard can give you a quick overview of fraud trends. And for businesses that need more control over which payments should be reviewed, allowed, or blocked, rules are a powerful tool to customize your fraud protection.

Before you can start detecting new fraud patterns, you first need to have a baseline of predictive signals, such as the performance of your existing rules. In other words, you need to know what looks "normal" for your business, or what a "good" transaction looks like. That's where your fraud analysts and data engineers work together (they may also work with DevOps teams and their observability suite). In our hypothetical scenario, an increase in declined transactions from "Mallory" card types is detected through ongoing monitoring.

Stripe Sigma tables with relevant fraud data

To detect emerging patterns, you'll first want to establish baseline performance with features such as issuer decline/authorisation rate and Radar block rate. Next, you'll want to query fraudulent disputes, early fraud warnings (Issuer Fraud Records) and payment transactions with high velocity, high issuer declines or high Radar risk scores. Ideally you'll schedule this query to run daily based on available data and have all dashboards visualised with past data, including weekly and monthly cuts, without even having to manually query Stripe Sigma or your data warehouse. This will speed up your incident response time.

Here are the most relevant tables:

| Stripe Sigma/Data Pipeline table name | Description |
|---|---|
| | Charge objects in Payments (post-Authentication raw charges, not Payment Intents) |
| | Disputes or Chargebacks, including ones marked as “Fraudulent” (potentially with the addition of Early Fraud Warnings and Reviews) |
| | Issuer Fraud Records sent by some schemes (note they are not always early and may not always convert to a dispute) |
| | The actual Radar rules including their syntax (especially with Rule Decisions) |
| | Customer objects – important for deduplication and address information (e.g., cardholder name and postcode) |
| | Authentication Attempts when using 3DS to add friction |
| | Tracks the final actions that Radar takes on transactions |
| | (New) Tracks the actual rule values after evaluation per transaction |

Your fraud or business analysts should have an idea about which additional breakdown dimensions may be important to evaluate based on your business domain. The Radar Rule Attributes table in Radar for Fraud Teams has detailed information for existing transactions evaluated by Radar, but it does not go back before April 2023 and does not include metadata or final outcomes. For earlier transactions, or for those missing fields, you would query the archive schema fields listed here:

| Breakdown dimension | Field in Radar Rule Attributes table | Field in archive schemas |
|---|---|---|
| Card Brand, Funding or Instrument type | card_brand | |
| Wallet Use | digital_wallet | charges.card_tokenization_method |
| Customer or Card Country or Region | card_country | charges.card_country |
| Card Fingerprint (for reuse) | card_fingerprint | charges.card_fingerprint |
| Amount (single transaction or over time) | amount_in_usd | charges.amount |
| CVC, Postcode (AVS) checks per transaction | cvc_check, address_zip_check | |
| Billing and Shipping Customer data, especially Postcode and Cardholder Name | shipping_address_postal_code, billing_address_postal_code and similar fields | customer.address_postal_code and similar fields |
| Product Segment | N/A | |
| Radar Risk Score | risk_score | charges.outcome_risk_score |
| Transaction Outcome | N/A | charges.outcome_network_status |
| Decline Reason | N/A | charges.outcome_reason |
| Customer (individual, clustered, or segments like age of account, country or region, separate for shipping and billing) | customer | payment_intents.customer_id |
| Connected Account for Platforms (individual, clustered, or segments like age of account, country or region) | destination | |

The new Radar Rule Attributes table tracks the same and many more dimensions for each transaction evaluated by Radar with the exact name of the Radar attributes. For instance, you can track velocity trends like name_count_for_card_weekly.

There are different ways to visualize trends, but a simple pivoted line chart per breakdown is a good way to easily compare against other factors. When you drill down in the Investigation phase, you may want to combine different breakdowns. Here’s an example table showing product segment breakdowns for the increase in declined transactions from “Mallory” card types:

| day_utc_iso | product_segment | charge_volume | dispute_percent_30d_rolling | raw_high_risk_percent |
|---|---|---|---|---|
| 25/08/2022 | gift_cards | 521 | 0.05% | 0.22% |
| 25/08/2022 | stationery | 209 | 0.03% | 0.12% |
| 26/08/2022 | gift_cards | 768 | 0.04% | 0.34% |
| 26/08/2022 | stationery | 156 | 0.02% | 0.14% |
| 27/08/2022 | gift_cards | 5,701 | 0.12% | 0.62% |
| 27/08/2022 | stationery | 297 | 0.03% | 0.1% |
| 28/08/2022 | gift_cards | 6,153 | 0.25% | 0.84% |
| 28/08/2022 | stationery | 231 | 0.02% | 0.13% |

You could visualize this as a pivoted line chart per product segment in your spreadsheet tool of choice.

Let's look closer at the example of a Stripe Sigma or Data Pipeline query to return baseline data. In the query below, you'll see daily dispute, decline, block rates, and more as separate columns. During Detection and Investigation, wide and sparse data in separate columns is often easier to visualise. It also makes it easier to map columns onto Radar predicates later. But your data scientists might prefer a tall and dense format for explorative analysis during Investigation, or machine learning in the Confirmation and Refinement and mitigation phases.

In this example, we include metadata on the payment to show a breakdown by product segment. In a wide approach, we would need similar queries for card brand ("Mallory") and Funding type, per our scenario. We deduplicate retry attempts here to focus on actual intents and get a better feeling of magnitude. We chose to deduplicate on payment intents – deeper integrations may send a field (e.g., an order ID, in metadata) to ensure deduplication across the user journey. This example shows how you can increase the precision of your anti-fraud measures by adding another factor. In our scenario this would be the product segment "gift cards". We'll come back to ways of increasing precision later in the Refinement and mitigation section.

Please note that we've simplified the queries used throughout this guide for readability. For example, we don't consider leading or lagging indicators like 3DS failures, disputes or early fraud warning creation time independently. We also don't include customer lifecycle data and other metadata like reputation or risk score across the whole conversion funnel. Also note that data freshness in Stripe Sigma and Data Pipeline does not show payments in real time.

This query doesn't include the actual dispute timing, which is a lagging indicator, but we included a few sample indicators – such as retries as an indicator of card testing. In this query, we get a few daily metrics in a simple way:

- The volume of charges, both deduplicated and raw value: For example, 1,150 charges a day, of which 100 are declined and 50 blocked by Radar, across 1,000 payment intents.

- The authorisation rate: In this example, about 91% for charges (1,000 authorised out of the 1,100 sent to the issuer, because blocked payments don't go to the issuer), and 100% for payment intents, as they all eventually successfully retried.

- The high risk and block rate: In this case, both would be about 4.3% of charges (all 50 high-risk charges were also blocked).

- The backwards-looking dispute rate for payments from the same period: For example, 5 in 1,000 charges were disputed. The number per payment day will increase when more disputes come in; hence, we include the execution time of the query to track the change.
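The arithmetic behind these daily metrics can be sketched outside of Stripe Sigma. The Python below is only an illustration, not the guide's query: the record fields (`day`, `payment_intent`, `outcome`) and the synthetic sample are assumptions chosen to reproduce the counts in the example (1,150 charges, 100 declined, 50 blocked, 1,000 payment intents).

```python
from collections import defaultdict

def daily_baseline(charges):
    """Aggregate per-day baseline metrics from charge records.

    Field names (day, payment_intent, outcome) are hypothetical.
    """
    days = defaultdict(lambda: {
        "charges": 0, "declined": 0, "blocked": 0,
        "intents": set(), "paid_intents": set(),
    })
    for c in charges:
        d = days[c["day"]]
        d["charges"] += 1
        d["intents"].add(c["payment_intent"])  # deduplicate retries per intent
        if c["outcome"] == "blocked":
            d["blocked"] += 1
        elif c["outcome"] == "declined":
            d["declined"] += 1
        else:  # treated as "authorized"
            d["paid_intents"].add(c["payment_intent"])

    report = {}
    for day, d in days.items():
        # Charges blocked by Radar never reach the issuer.
        sent_to_issuer = d["charges"] - d["blocked"]
        report[day] = {
            "charge_volume": d["charges"],
            "intent_volume": len(d["intents"]),
            "charge_auth_rate": (
                (sent_to_issuer - d["declined"]) / sent_to_issuer
                if sent_to_issuer else None
            ),
            "intent_auth_rate": len(d["paid_intents"]) / len(d["intents"]),
            "block_rate": d["blocked"] / d["charges"],
        }
    return report

# Synthetic day: 1,000 intents that all succeed eventually, with
# 100 declined and 50 blocked first attempts (1,150 charges in total).
DAY = "2022-08-25"
sample = []
for i in range(1000):
    intent = f"pi_{i}"
    if i < 100:
        sample.append({"day": DAY, "payment_intent": intent, "outcome": "declined"})
    elif i < 150:
        sample.append({"day": DAY, "payment_intent": intent, "outcome": "blocked"})
    sample.append({"day": DAY, "payment_intent": intent, "outcome": "authorized"})

baseline = daily_baseline(sample)[DAY]
print(baseline)
```

Deduplicating on the payment intent is what separates the charge-level authorisation rate from the intent-level one; a deeper integration could swap in an order ID from metadata as the deduplication key.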

As noted earlier, we've simplified these queries for readability. In reality this query would have even more dimensions – for example, trends, deviations, or loss differences.

We've also included an example of a rolling average of 30 days using a window function. More complex approaches, such as looking at percentiles instead of averages to identify long tail attacks, are possible but rarely needed for initial fraud detection, as trendlines are more important than perfectly accurate numbers.
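As a rough illustration of the rolling window idea, the sketch below computes a trailing dispute rate in plain Python. It is an assumption-laden stand-in for a SQL window function: the function name `rolling_rate` and the daily input lists are hypothetical, and the rate is calculated as the sum of disputes over the sum of charges in the window rather than as a mean of daily rates.

```python
def rolling_rate(numerators, denominators, window=30):
    """Trailing rate over the last `window` days (fewer at the start).

    Inputs are parallel lists of daily counts, oldest first
    (for example daily disputes and daily charges).
    """
    rates = []
    for i in range(len(numerators)):
        start = max(0, i - window + 1)
        num = sum(numerators[start:i + 1])
        den = sum(denominators[start:i + 1])
        rates.append(num / den if den else None)  # None when there is no volume
    return rates

# Hypothetical daily counts with a 2-day window to keep the example short.
daily_disputes = [1, 0, 2]
daily_charges = [10, 10, 20]
rolling = rolling_rate(daily_disputes, daily_charges, window=2)
print(rolling)
```

Weighting by volume this way keeps a low-volume day from dominating the trendline, which matters more for detection than exact values.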

Once you understand the baseline, you can start tracking anomalies and trends to investigate, for instance, an increase in fraud or block rate from a certain country or payment method (in our hypothetical scenario, this would be the card brand "Mallory"). Anomalies are typically investigated using reports or manual analyses in the Dashboard, or ad hoc queries in Stripe Sigma.

Investigation

Once your analysts find an anomaly to investigate, the next step is to query in Stripe Sigma (or your data warehouse via Data Pipeline) to explore the data and build a hypothesis. You'll want to include some breakdown dimensions based on your hypotheses – for example, payment methods (card use), channel or surface (metadata), customer (reputation) or products (risk category) that have a tendency for fraud. Later, in the Confirmation stage, you may call those dimensions "features". These will be mapped onto Radar predicates.

Let's get back to our hypothesis that a large volume of transactions on prepaid cards by "Mallory" have higher fraud rates, which you can represent as a grouping dimension to pivot on using your analysis tool. Typically in this stage the query is iterated and tweaked – so it's a good idea to include various predicate candidates as reduced or extended versions of the hypotheses, removing minor features, to gauge their impact. For example:

| is_rule_1a_candidate1 | is_rule_1a_candidate1_crosscheck | is_rule_1a_candidate2 | is_rule_1a_candidate3 | event_date | count_total_charges |
|---|---|---|---|---|---|
| True | False | True | True | before | 506 |
| False | False | True | False | before | 1,825 |
| True | False | True | True | after | 8,401 |
| False | False | True | False | after | 1,493 |

This approach would give you an idea of the magnitude to prioritize impact. The table tells you with reasonable confidence that rule candidate 3 seems to catch the excess malicious transactions. A more sophisticated evaluation would be based on accuracy, precision, or recall. You can create an output like this with the query below.

In this query, we removed deduplication for readability but included the dispute rate and early fraud warning rate, which are important for card brand monitoring programs. These are lagging indicators, however, and in this simplified query we only track them looking backwards. We also included arbitrary payment samples for cross-checking and deeper investigation of the patterns found in the query—we’ll talk about this more later.
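To make the pivot over rule candidates concrete, here is a minimal Python sketch of the same grouping. It is not the guide's query: the candidate definitions, the cutoff date, and the record fields are all hypothetical and only illustrate how each charge is flagged per candidate and counted before and after the anomaly started.

```python
from collections import Counter
from datetime import date

CUTOFF = date(2022, 8, 27)  # hypothetical day the anomaly started

# Hypothetical candidate predicates, from narrowest to broadest variations.
CANDIDATES = {
    "is_rule_1a_candidate1": lambda c: (
        c["card_funding"] == "prepaid" and c["card_brand"] == "mallory"
    ),
    "is_rule_1a_candidate2": lambda c: c["card_brand"] == "mallory",
    "is_rule_1a_candidate3": lambda c: (
        c["card_funding"] == "prepaid"
        and c["card_brand"] == "mallory"
        and c["amount_usd"] > 20
    ),
}

def pivot_candidates(charges):
    """Count charges per combination of candidate flags and period."""
    counts = Counter()
    for c in charges:
        flags = tuple(pred(c) for pred in CANDIDATES.values())
        period = "before" if c["day"] < CUTOFF else "after"
        counts[flags + (period,)] += 1
    return counts

sample = [
    {"day": date(2022, 8, 25), "card_brand": "mallory", "card_funding": "prepaid", "amount_usd": 50},
    {"day": date(2022, 8, 25), "card_brand": "mallory", "card_funding": "credit", "amount_usd": 15},
    {"day": date(2022, 8, 27), "card_brand": "mallory", "card_funding": "prepaid", "amount_usd": 50},
    {"day": date(2022, 8, 28), "card_brand": "mallory", "card_funding": "prepaid", "amount_usd": 35},
    {"day": date(2022, 8, 28), "card_brand": "mallory", "card_funding": "credit", "amount_usd": 12},
]
pivot = pivot_candidates(sample)
for key, count in sorted(pivot.items(), key=str):
    print(key, count)
```

Keeping every candidate as its own boolean column mirrors the wide format used during Detection and makes it easier to map a winning candidate onto Radar predicates later.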

You might want to break down additional metrics into a histogram to identify clusters, which can be useful to define velocity rules that you can use for rate limiting (e.g., total_charges_per_customer_hourly).

Identifying trends via histogram analysis is an excellent way to understand your desired customer behavior across their whole lifecycle and conversion funnel. Adding it to the query above would be too complex, but here is a simple example of how to break this down without complex rolling window logic, assuming you have a customer type in the metadata (e.g., guest users):
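A histogram of hourly charge velocity can be sketched in a few lines of Python. This is an illustrative assumption rather than the original query: the fields `customer`, `customer_type`, and `created` (an ISO 8601 timestamp string) are hypothetical, and the hour bucket is a fixed calendar hour rather than a rolling window.

```python
from collections import Counter

def hourly_velocity_histogram(charges, customer_type="guest"):
    """Map 'charges per customer per hour' to how often that count occurs."""
    per_customer_hour = Counter(
        (c["customer"], c["created"][:13])  # "YYYY-MM-DDTHH" calendar-hour bucket
        for c in charges
        if c["customer_type"] == customer_type
    )
    return Counter(per_customer_hour.values())

sample = [
    {"customer": "cus_a", "customer_type": "guest", "created": "2022-08-27T14:01:00"},
    {"customer": "cus_a", "customer_type": "guest", "created": "2022-08-27T14:20:00"},
    {"customer": "cus_a", "customer_type": "guest", "created": "2022-08-27T14:45:00"},
    {"customer": "cus_b", "customer_type": "guest", "created": "2022-08-27T14:10:00"},
    {"customer": "cus_b", "customer_type": "guest", "created": "2022-08-27T16:10:00"},
    {"customer": "cus_c", "customer_type": "registered", "created": "2022-08-27T14:30:00"},
]
histogram = hourly_velocity_histogram(sample)
for charges_per_hour, occurrences in sorted(histogram.items()):
    print(charges_per_hour, occurrences)
```

If most legitimate guest customers cluster at one or two charges per hour, a long tail above that is a candidate threshold for a velocity predicate.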

In our scenario you may not want to block all prepaid cards from "Mallory", but still want to confidently identify other correlated risk drivers. This velocity query might improve confidence to, for example, avoid blocking low-frequency guest customers.

A simple way to investigate is to look at samples directly in the Dashboard via the "Related Payments" view to get an understanding of the behaviour – that is, the attack vector or fraudulent pattern – and the associated Radar risk insights. That's why we included arbitrary payment samples in the query above. This way you can also find more recent payments that are not available in Stripe Sigma yet. A more defensive and high-touch way would be to model your hypothesis as a review rule that still allows payments but directs them to your analysts for manual review. Some customers do that to consider refunding payments below the dispute fee while blocking ones above.

Confirmation

Going forward, let's assume the pattern you identified is not simple, cannot be mitigated by refunding and blocking the fraudulent customer, and does not just fall under a default block list.

After identifying and prioritising a new pattern to address, you need to analyse its potential impact on your legitimate revenue. This is not a trivial optimisation problem, because the optimal amount of fraud is non-zero. Of all the different model candidates, choose the model that represents the best trade-off for your risk appetite, either by simple magnitude or precision and recall. This process is sometimes called back-testing, especially when rules are written first and then validated against your data. (You can also do this in reverse – that is, discover patterns first and then write rules). For instance, instead of writing one rule per country, write a rule like this that makes confirmation easier:

Block if :card_funding: = 'prepaid' and :card_country: in @prepaid_card_countries_to_block

The query shared above in the Investigation section might also be used as a model, except different values would be emphasised – we'll talk about this more later when we get to Refinement and mitigation techniques.
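When comparing model candidates by precision and recall, the calculation itself is small. The Python sketch below assumes historical charges labelled with a hypothetical `fraud` flag (for example, derived from fraudulent disputes) and uses placeholder country codes for the list; none of these names come from Stripe's schema.

```python
# Placeholder codes standing in for a list such as @prepaid_card_countries_to_block.
PREPAID_CARD_COUNTRIES_TO_BLOCK = {"AA", "BB"}

def candidate_rule(charge):
    """Python reading of: prepaid funding and card country in the block list."""
    return (
        charge["card_funding"] == "prepaid"
        and charge["card_country"] in PREPAID_CARD_COUNTRIES_TO_BLOCK
    )

def precision_recall(charges, predicate):
    """Return (precision, recall) of a predicate against labelled charges."""
    tp = fp = fn = 0
    for c in charges:
        flagged = predicate(c)
        if flagged and c["fraud"]:
            tp += 1
        elif flagged:
            fp += 1  # legitimate charge the rule would have blocked
        elif c["fraud"]:
            fn += 1  # fraud the rule would have missed
    precision = tp / (tp + fp) if tp + fp else None
    recall = tp / (tp + fn) if tp + fn else None
    return precision, recall

sample = [
    {"card_funding": "prepaid", "card_country": "AA", "fraud": True},
    {"card_funding": "prepaid", "card_country": "AA", "fraud": True},
    {"card_funding": "prepaid", "card_country": "BB", "fraud": False},
    {"card_funding": "credit", "card_country": "AA", "fraud": True},
    {"card_funding": "prepaid", "card_country": "CC", "fraud": False},
]
precision, recall = precision_recall(sample, candidate_rule)
print(precision, recall)
```

Because the country set is data rather than logic, the same predicate can be re-scored against different lists without rewriting the rule, which is the point of preferring a list over one rule per country.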

Stripe Sigma schema to Radar rule mapping

Next, translate your exploratory Stripe Sigma or Data Pipeline queries into Radar rules by mapping each Radar rule attribute to its Stripe Sigma column, so that you can back-test the rules against your data. Here are a few common mappings, assuming you are sending the right signals to Radar:

| Radar rule name | Stripe Sigma table and column |
|---|---|
| address_zip_check | charges.card_address_zip_check |
| amount_in_xyz | |
| average_usd_amount_attempted_on_customer_* | |
| billing_address_country | charges.card_address_country |
| card_brand | charges.card_brand |
| card_country | charges.card_country |
| card_fingerprint | charges.card_fingerprint |
| card_funding | charges.card_funding |
| customer_id | payment_intents.customer_id |
| card_count_for_customer_* | payment_intents.customer_id and charges.card_fingerprint |
| cvc_check | charges.card_cvc_check |
| declined_charges_per_card_number_* | |
| declined_charges_per_*_address_* | |
| destination | charges.destination_id for Connect Platforms |
| digital_wallet | charges.card_tokenization_method |
| dispute_count_on_card_number_* | |
| efw_count_on_card_* | early fraud warning and charges.card_fingerprint |
| is_3d_secure | payment method details.card_3ds_authenticated |
| is_3d_secure_authenticated | payment method details.card_3ds_succeeded |
| is_off_session | payment_intents.setup_future_usage |
| risk_score | charges.outcome_risk_score |
| risk_level | charges.outcome_risk_level |

The last two items, risk_level and risk_score, are not like the others: they are outputs of Radar’s machine learning model, which is itself derived from the other factors. Instead of writing overly complex rules, we recommend you lean on Radar’s machine learning, which we’ll cover in the Refinement using machine learning section.

The new Radar Rule Attributes table tracks the same and many more dimensions for each transaction that was actually evaluated by Radar with the exact name of the Radar attributes.

The table above shows the standard set of attributes and deliberately omits signals that you would customize for your customer journey, such as Radar Sessions or metadata.

Based on the investigation above, let’s assume you arrive at a rule that looks like this:

Block if :card_funding: = 'prepaid' and :card_brand: = 'mallory' and :amount_in_usd: > 20 and ::CustomerType:: = 'guest' and :total_charges_per_customer_hourly: > 2

The next step is to confirm the impact of this rule on your actual payments transactions. Typically you would do this with block rules. Read our Radar for Fraud Teams: Rules 101 guide for guidance on how to write the correct rule syntax. A simple way of testing a block rule is to create it in test mode and create manual test payments to validate that it works as intended.

Both block rules and review rules can be backtested in Radar using the Radar for Fraud Teams simulation feature.

One advantage of using Radar for Fraud Teams simulations is that it considers the impact of other existing rules. Maintenance of rules is not the focus of this guide, but removing and updating rules should also be part of your continuously improving process. Generally speaking, the number of rules you have should be small enough that each rule is atomic and can be monitored using the baseline queries developed in the Detection and Investigation phases, and backtested clearly, without risk of side effects (e.g., rule 2 only works because rule 1 filters something else out).

You can also do this by using review rules instead of block rules, which we’ll cover in the next section.
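Alongside Radar's own simulation feature, a rough offline back-test can help estimate how much legitimate revenue a block rule would have cost. The Python sketch below reads the rule from this section as prepaid funding, Mallory brand, amount above 20 USD, guest customer, and more than two charges per customer per hour. The record fields and the `fraud` label are hypothetical, and the sketch ignores interactions with other rules, which the simulation feature does account for.

```python
def rule_matches(charge):
    """Python reading of the block rule; field names are hypothetical."""
    return (
        charge["card_funding"] == "prepaid"
        and charge["card_brand"] == "mallory"
        and charge["amount_in_usd"] > 20
        and charge["customer_type"] == "guest"
        and charge["total_charges_per_customer_hourly"] > 2
    )

def backtest(charges, predicate):
    """Summarise what a block rule would have caught on past charges."""
    summary = {"matched": 0, "matched_fraud": 0, "legit_usd_blocked": 0.0}
    for c in charges:
        if not predicate(c):
            continue
        summary["matched"] += 1
        if c["fraud"]:
            summary["matched_fraud"] += 1
        else:
            summary["legit_usd_blocked"] += c["amount_in_usd"]
    return summary

def make_charge(amount, customer_type, hourly, fraud, funding="prepaid"):
    """Build a hypothetical historical charge record."""
    return {
        "card_funding": funding, "card_brand": "mallory",
        "amount_in_usd": amount, "customer_type": customer_type,
        "total_charges_per_customer_hourly": hourly, "fraud": fraud,
    }

history = [
    make_charge(50.0, "guest", 4, True),
    make_charge(35.0, "guest", 3, True),
    make_charge(25.0, "guest", 3, False),      # legitimate, but would be blocked
    make_charge(50.0, "guest", 1, False),      # below the velocity threshold
    make_charge(80.0, "registered", 5, True),  # not a guest, rule does not match
]
result = backtest(history, rule_matches)
print(result)
```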

Refinement and mitigation

Finally, after testing your block rules, you apply the model to prevent, monitor, or reroute fraud. We call this Refinement because it's about increasing the precision of your overall anti-fraud measures, particularly in concert with machine learning. To increase precision, you may implement a variety of techniques. Sometimes this step, which may also be known as containment or mitigation, is done at the same time as Confirmation, where instead of using review rules, A/B tests (metadata), or simulations to evaluate your model, you immediately block the suspicious charges to mitigate the immediate risk.

Even if you've already taken action, there are some techniques you can use to refine the model you developed in steps 1–3 to improve results over time:

Refinement using review rules

If you don't want to risk a higher false positive rate, which may impact your revenue, you can opt for implementing review rules. While this is a more manual process and may add friction to the customer experience, review rules can allow more legitimate transactions to ultimately proceed. (You can also add throttling, in the form of velocity rules, into existing block rules for slower-paced transactions.) A more advanced option for using review rules is A/B testing, which is especially useful in managing the total number of cases in the review queue. You can leverage metadata from your payments to start allowing a small amount of traffic while A/B testing – for example, from known customers or simply using a random number. We recommend adding this to block rules instead of creating allow rules, as allow rules will override blocks and therefore make it harder to track the performance of the block rule over time.
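One way to produce the random split for A/B testing is to derive a stable bucket from an identifier you already send, and attach it to the payment as metadata. The sketch below is a hypothetical illustration: the function names, the metadata keys `ab_bucket` and `ab_group`, and the 5% default are assumptions, not part of any Stripe API.

```python
import hashlib

def ab_bucket(order_id, buckets=100):
    """Deterministically map an order ID to a bucket in [0, buckets)."""
    digest = hashlib.sha256(order_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets

def payment_metadata(order_id, allow_percent=5):
    """Build metadata to attach to the payment (keys are hypothetical)."""
    bucket = ab_bucket(order_id)
    return {
        "order_id": order_id,
        "ab_bucket": str(bucket),  # metadata values are sent as strings
        "ab_group": "allow" if bucket < allow_percent else "control",
    }

metadata = payment_metadata("order_1001")
print(metadata)
```

A block rule could then carry an extra condition on that metadata, for example `and ::ab_group:: != 'allow'`, so that a small share of matching traffic still proceeds without a separate allow rule. Hashing rather than calling a random generator keeps retries of the same order in the same group.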

Optimising rules by monitoring their performance

To monitor rule performance, you can check the charge outcome object in the Payment Intents API, in particular the rule object. Similarly, in Stripe Sigma you can use the charges.outcome_rule_id, charges.outcome_type and payment_intents.review_id fields for analysis. Here is an example of how to track a rule's performance in Stripe Sigma using the special Radar rule decisions table:
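The aggregation behind such tracking can be sketched in Python on exported decision records. This is not the guide's Stripe Sigma query: the record fields (`rule_id`, `disputed`) and the rule IDs are hypothetical, and the dispute rate is only meaningful for rules that let payments through, such as review or 3DS rules.

```python
from collections import defaultdict

def rule_performance(decisions):
    """Count matches and later disputes per rule (hypothetical fields)."""
    stats = defaultdict(lambda: {"matched": 0, "disputed": 0})
    for d in decisions:
        s = stats[d["rule_id"]]
        s["matched"] += 1
        s["disputed"] += int(d["disputed"])
    return {
        rule_id: {**s, "dispute_rate": s["disputed"] / s["matched"]}
        for rule_id, s in stats.items()
    }

decisions = [
    {"rule_id": "rule_review_prepaid", "disputed": True},
    {"rule_id": "rule_review_prepaid", "disputed": False},
    {"rule_id": "rule_review_prepaid", "disputed": False},
    {"rule_id": "rule_3ds_high_risk", "disputed": False},
]
performance = rule_performance(decisions)
for rule_id, stats in performance.items():
    print(rule_id, stats)
```

Tracking these numbers per rule over time is what makes atomic rule changes traceable: a shift after a deployment can be attributed to a single rule.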

Refinement using machine learning

Often the next step after blocking an immediate attack is to iteratively refine the rule alongside machine learning to reduce false positives, allowing more legitimate traffic, and therefore revenue, to come through.

Take, for example, BIN or IIN (issuer identification number) blocking. During a card testing attack you may have temporarily added a BIN to a block list, which gives issuers time to fix their gaps. Blocking an issuer outright might, however, reduce your revenue; a more refined approach is to apply more scrutiny over time and refine the model. This is a good moment to revisit how to write effective rules and how to evaluate risk, in particular Radar’s risk scoring. We generally recommend combining Radar’s machine learning with your intuition. Instead of just having one rule to block all high-risk transactions, combining the score with manual rules that represent a risk model or scenario often strikes a better balance between blocking malicious traffic and allowing revenue. For example:

Block if :card_funding: = 'prepaid' and :card_brand: = 'mallory' and :card_country: in @high_risk_card_countries_to_block and :risk_score: > 65 and :amount_in_usd: > 10

Refinement using 3DS

As mentioned earlier, while this guide does not cover the breadth of 3D Secure (3DS) authentication, you should consider it as part of your risk mitigation strategy. For example, while liability shift may lower your dispute fees for fraudulent transactions, these transactions still count toward card monitoring programs—and with that your user experience. Instead of a fixed amount, you could refine this rule from “block all relevant charges” to “request 3DS”:

Request 3DS if :card_country: in @high_risk_card_countries_to_block or :ip_country: in @high_risk_card_countries_to_block or :is_anonymous_ip: = 'true' or :is_disposable_email: = 'true'

And then use a rule to put a block on cards that did not authenticate successfully or for other reasons don’t provide liability shift:

Block if (:card_country: in @high_risk_card_countries_to_block or :ip_country: in @high_risk_card_countries_to_block or :is_anonymous_ip: = 'true' or :is_disposable_email: = 'true') and :risk_score: > 65 and :has_liability_shift: != 'true'

Refinement using lists

Using the default lists or maintaining custom lists can be a very effective way to block an attack during an incident without the risk of changing rules—for instance, by blocking a fraudulent customer, email domain, or card country. Part of Refinement is deciding which patterns should be a rule, which should change a rule predicate, and which can simply add value to an existing list. Breakglass rules are a good example of a stopgap during an incident that might afterwards be refined into either a list or a change to an existing rule.

Feedback process

Refinement also means you return to step 1—that is, monitoring your rule performance and fraud patterns. Good monitoring depends on established baselines and single, atomic changes for optimal traceability, precision, and recall of backtesting. For this reason, we recommend only changing rules and predicates in clear, distinct deployments and otherwise relying on lists, reviews, and proactive blocking and refunding.

How Stripe can help

Radar for platforms

Are you a platform using Stripe Connect? If so, any rules you create only apply to payments created on the platform account (in Connect terms, these are destination or on-behalf-of charges). Payments created directly on the Connected account are subject to that account’s rules.

Radar for Terminal

Stripe Terminal charges aren’t screened by Radar. This means that if you use Terminal, you can write rules based on IP frequency without worrying about blocking your in-person payments.

Stripe professional services

Stripe’s professional services team can help you implement a continuously improving fraud management process. From strengthening your current integration to launching new business models, our experts provide guidance to help you build on your existing Stripe solution.

Conclusion

In this guide, we've seen how Stripe Sigma or Data Pipeline can be used to build both a baseline as well as various fraud models that represent attack scenarios and patterns that only you and your business can judge. We've also shown how you can extend and fine-tune Radar to react to a wider range of fraudulent transactions based on your custom rules.

Because payments fraud is continuously evolving, this process must also continuously evolve in order to keep up – as we outlined in our Detection, Investigation, Confirmation, and Refinement and mitigation model. Running these cycles on an ongoing basis with a fast feedback loop should enable you to spend less time reacting to incidents and more time developing a proactive fraud strategy.

You can learn more about Radar for Fraud Teams here. If you're already a Radar for Fraud Teams user, check out the Rules page in your Dashboard to get started with rule writing.

You can learn more about Stripe Sigma here and about Stripe Data Pipeline here.