With voice cloning, Descript takes the next step in AI podcast and video editing

Jay LeBoeuf of Descript talks about how the company uses AI to make editing audio and video as easy as editing a text document. This includes Overdub, Descript's new voice cloning feature.

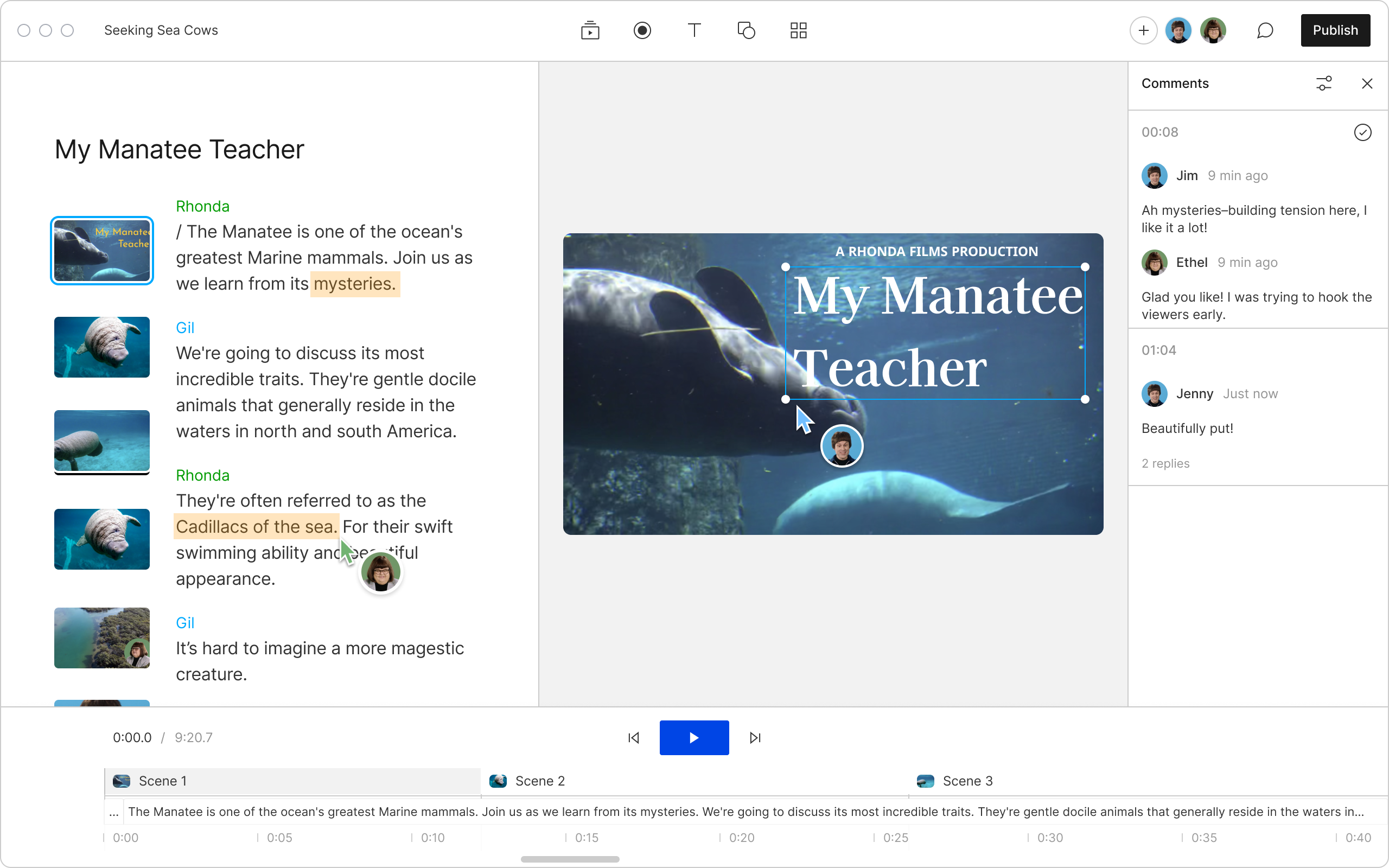

Traditional audio and video editing software, with its dozens of tools and panels, can take months to learn and years to master. The startup Descript launched in 2017 with a simple but ambitious idea: What if you could edit footage just by editing text? And better yet, what if the text was from a transcript that your editing app created automatically?

Using generative AI and language processing, Descript gives everyday creators the power to cut together professional-quality content themselves. Audio or video files are automatically transcribed into a text document; users then cut, paste, and delete text, and the corresponding audio or video automatically follows suit.
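To make the idea concrete, here is a minimal sketch of how deleting words in a transcript could translate into audio cuts. It is an illustration only, not Descript's implementation: the `Word` type, the `keep_ranges` function, and the timestamps are all hypothetical, and the sketch assumes each transcribed word carries a start and end time in seconds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Word:
    """One transcribed word with its (assumed) position in the audio."""
    text: str
    start: float  # seconds
    end: float    # seconds


def keep_ranges(words, deleted_indices):
    """Return merged (start, end) audio ranges covering the words that remain.

    Consecutive kept words are merged into one range; each deleted word
    closes the current range, so the audio under it is skipped.
    """
    ranges = []
    prev_kept = False
    for i, word in enumerate(words):
        if i in deleted_indices:
            prev_kept = False
            continue
        if prev_kept:
            # Extend the current range to the end of this word.
            ranges[-1] = (ranges[-1][0], word.end)
        else:
            ranges.append((word.start, word.end))
        prev_kept = True
    return ranges


# Hypothetical transcript: deleting "um" (index 1) splits the audio in two.
words = [
    Word("So", 0.0, 0.3),
    Word("um", 0.3, 0.7),
    Word("hello", 0.7, 1.2),
    Word("world", 1.2, 1.8),
]
cuts = keep_ranges(words, {1})
```

An editor built this way would play or export only the returned ranges, which is why the quality of the word-to-audio alignment matters so much for clean cuts.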

Stripe spoke to Jay LeBoeuf, Descript’s head of business and corporate development, and a veteran in the field of speech and sound recognition. We asked him about how the company balances AI’s creative potential with its risks, about its voice-clone feature called Overdub, and about how the company has benefited from working with Stripe. The interview, which we transcribed on Descript, has been edited and condensed for clarity.

What gave you all the idea to make audio and video editing basically like editing a Word doc?

People are natural storytellers, and we all can rally around words and writing as our way of capturing ideas. Text is something that's just very familiar, whether you’re just getting started and you have no idea what a waveform is, or you’re a professional and know exactly how you’d like to restructure the story.

What sets your product apart from other transcription technology?

We’ve layered some special sauce on top of our technology that makes the edits seamless. For one thing, Descript perfectly aligns the transcript to your audio so that all the edits you make are exactly where you want them. And cuts are basically undetectable. For example, if you want to snip out a word or a sentence that I said using Descript, there won’t be a gap, and it’s not going to sound like I took a breath in the middle of the sentence. It won’t sound like a poorly edited jump cut, either. It’s all just going to work as if you had a skilled editor going in and doing the hard work.

This all involves advanced technology, but you barely know it’s there. In a typical Descript video editing experience, there are 11 times that you'll encounter AI without even knowing that AI has touched your creation.

Wow. Like what?

So, we’re recording this interview. Now, let’s imagine that afterwards, you take the file and drag it into Descript. This is the first instance of AI kicking in, where all of the words in the file get transcribed and appear as text. Then we have AI that will do speaker detection. So Descript will identify when you’re talking and when I’m talking.

Our AI can also automatically improve the sound quality of the recording. So, I have a decent mic, but a lot of other people are in acoustic environments that don't sound professional. So we developed a technology called Studio Sound that goes in and makes every person sound like they're in an NPR-quality broadcast environment.

It also does natural language processing. So all of the “ums” and “ahs,” and other filler words that are getting in the way of me telling my story, can just be snipped out with one button press.

A screenshot from the Descript app.

Sometimes filler words or long pauses can add texture to a piece of audio or video. Like a dramatic pause. Can your technology differentiate between meaningful pauses and extraneous ums?

Absolutely. We understand that filler words and pauses can help with credibility, authenticity, and drama. So, while we have a one-click removal tool, we also allow users to apply changes to individual instances if they prefer. We like to think of AI as a workflow tool in the hands of a skilled storyteller.
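The difference between one-click removal and per-instance control can be sketched in a few lines. This is a simplified illustration, not Descript's method: the `FILLERS` set, the function names, and the `keep` parameter are hypothetical, and matching against a fixed word list stands in for whatever language processing the product actually uses.

```python
# Hypothetical filler list; a real system would use a richer model.
FILLERS = {"um", "uh", "ah"}


def filler_indices(tokens, keep=frozenset()):
    """Indices of filler tokens, excluding any the user chose to keep."""
    return [
        i
        for i, token in enumerate(tokens)
        if token.lower().strip(".,!?") in FILLERS and i not in keep
    ]


def remove_fillers(tokens, keep=frozenset()):
    """Drop every filler token except the instances listed in `keep`."""
    drop = set(filler_indices(tokens, keep))
    return [token for i, token in enumerate(tokens) if i not in drop]


tokens = ["Um,", "I", "think", "uh", "we", "should", "um", "wait"]

# One-click removal: every filler goes.
cleaned = remove_fillers(tokens)

# Per-instance control: keep the dramatic "um" at index 6.
cleaned_with_pause = remove_fillers(tokens, keep={6})
```

Passing an explicit set of indices to keep mirrors the idea that the storyteller, not the tool, decides which pauses and fillers carry meaning.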

Can you talk about how Descript is incorporating the ability of AI to actually generate novel language?

We have a voice technology called Overdub. It allows anybody to clone their own voice—and only their own voice.

So, let’s say I’m the host of a podcast. I create a draft of an episode, but then realize I’ve made some mistakes. Let’s say I call a guest Sam instead of Henry by mistake. Well, I’ve created my own Jay voice clone that I can use to correct that. All it took was ten minutes of speaking into a mic to give Overdub enough training material. I double-click the word Sam and type in Henry, and Overdub will synthesize me in the same acoustic environment saying the correct name.

Overdub is very popular with our business users, particularly product marketing teams.

Why is that?

Let’s say you frequently need to update product names, or instructions about where to find something. You can select what you need to fix and retype it instead of rerecording each time. Or let’s say you’re the voice of a product demo and you realize you need to add a call to action, explaining what users can do to learn more. You can just type in entire sentences and Overdub will voice them for you.

What if someone tries to clone my voice without my consent?

When you create your Overdub voice, you not only have to provide us with training material of what you sound like, but you also have to read a consent statement that we prompt you for, live. We match that consent statement against your training material both algorithmically, using a voice fingerprint, and with a team of humans with headphones, to make sure that you really are present and that your training material matches your consent.

Overdub, a voice-clone feature of Descript, allows users to create a text-to-speech model of their voice, or use ultra-realistic stock voices.

Can you talk about your relationship with Stripe?

We use a number of Stripe products in concert with each other—Stripe’s payments platform, Billing, Radar, Sigma, and Revenue Recognition. It’s been very helpful to consolidate processing, subscriptions, invoicing, and recognition in one place. We save on cost, but it also means reducing complexity—there’s less engineering required on our end to integrate systems together. Stripe is an extraordinarily developer-friendly partner.

What are some ways Stripe has been developer friendly?

The API documentation is the gold standard, for one. It’s clear from stuff like including test keys in code examples that Stripe cares about making the API easy to integrate.

You’re also responsive. We were part of the beta test for Revenue Recognition and had several meetings with the product and billing teams in which they took time to walk us through changes. Or with something like testing webhooks, there have been several improvements made during our time integrating with Stripe. You’re always working at making the experience better.

Will Descript eventually be able to use large language models to suggest actual content that could then be created with Overdub?

We recently announced an integration with OpenAI’s GPT-4 that will be available soon. Now, what will that look like? What you’ve mentioned is one possibility, and it’s something users are telling us they’d like. It’s great to have OpenAI as a partner, and I think everybody’s going to be floored by what they see us come out with this year.